Smart Eye and OmniVision Announce End-to-End Interior Sensing Solution

Smart Eye AB and OmniVision Technologies have jointly announced a full interior sensing solution for automotive OEMs that enables complete driver and cabin monitoring, as well as videoconferencing applications, from a single RGB-IR sensor.

The solution is the first integrated video processing chain that combines innovative features based on the OmniVision OV2312 RGB-IR sensor, and it supports exceptional day and night performance.

“Interior Sensing AI is crucial for the automotive industry. Not only is this technology improving automotive safety – saving human lives around the world – it is also enabling automakers to provide differentiated mobility experiences that enhance wellness, comfort and entertainment,” says Martin Krantz, Founder and CEO of Smart Eye. “By partnering with OmniVision, we are delivering on this vision: providing an end-to-end, highly advanced Interior Sensing system that meets the demands of automotive OEMs, at a price point that makes it viable for the mass market.”

“Empowered by our OmniPixel®3-GS pixel technology, the OV2312 is a 2.1MP, RGB-IR, global shutter image sensor that was designed specifically for interior applications, and it strikes a balance with MTF, NIR QE, and power consumption. We are proud to partner with Smart Eye to enable this accurate full interior sensing solution,” says Brian Pluckebaum, automotive product marketing manager at OmniVision.

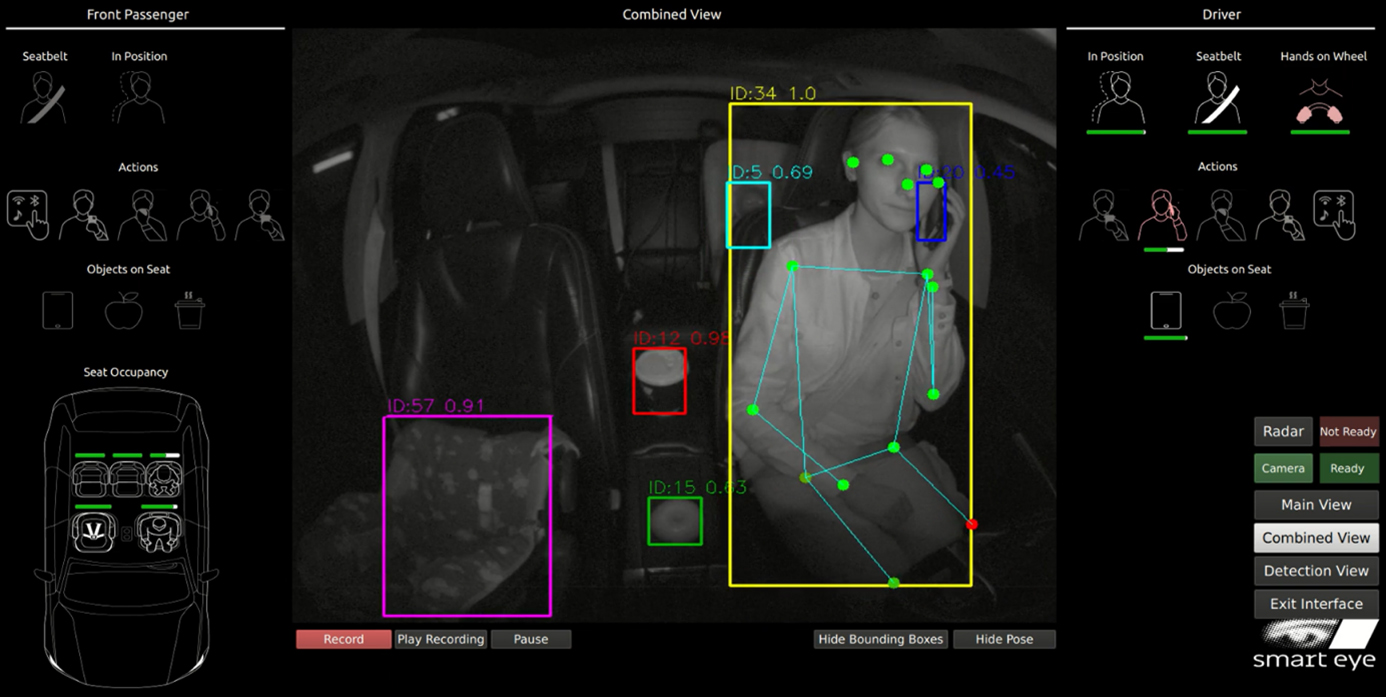

Smart Eye’s AI-based eye, mouth and head tracking technology provides Euro NCAP performance combined with full cabin monitoring and driver monitoring. Driver monitoring features distraction, drowsiness and incapacitated driver detection, along with driver identification and spoof-proof processing. Cabin monitoring includes occupancy detection for all seats, combined with out-of-position, seat belt status and forgotten baby detection. Action detection identifies occupant actions such as the driver’s hands on the steering wheel, interaction with a mobile device, calling, drinking, and eating. These actions may affect the interaction between the vehicle and the occupant, and understanding them will be a prerequisite for higher levels of vehicle autonomy.