Microprocessor architecture choice in the age of AI: exploring ARM and RISC-V

In a world where the everyday devices we use are based on programmable silicon, choosing the right microprocessor architecture is key to delivering a successful product. In the age of AI, the microprocessor selection for anchoring an AI solution is especially important.

BY GOPAL HEGDE, SENIOR VICE PRESIDENT OF ENGINEERING AND OPERATIONS AT SIMA.AI.

INNOVATORS building their own silicon are familiar with the process of selecting an Instruction Set Architecture (ISA), which defines the instructions and data types a CPU uses to perform calculations, manage data and interact with memory and other components in a given product. It is also important to understand the ecosystem of design implementations, tools and extended software support, as well as the flexibility of the licensing options.

For AI/ML products, other considerations include support for data types used in AI/ML and native instructions to accelerate AI/ML applications.

While the x86 ISA has dominated the computing market, x86 CPU core licenses are not widely available outside of the Intel foundry ecosystem. That leaves two primary players with a licensable ISA and a strong ecosystem: ARM and RISC-V. ARM boasts a legacy of domination in the embedded device market, while the open RISC-V ISA claims to be the architecture of choice for the flexibility desired by emerging AI companies. These factors largely determine their adoption and how they are used in AI systems.

ARM and RISC-V side-by-side

ARM emerged in the 1990s and has become ubiquitous due to its energy efficiency, broad ecosystem support, flexible licensing terms and integrated design implementations. The general RISC design philosophy prioritizes a reduced number of instruction classes, parallel pipeline units and a large general-purpose register set, though ARM has further evolved this with extensions. The ARM processor has been specially designed to reduce power consumption and extend battery operation, and contains features for multi-threading, co-processors and higher code density, along with comprehensive software compilation and hardware debug technology.

ARM is a licensable IP, allowing many companies to implement custom ARM-based designs into their products. These license fees fund ongoing ARM developments and allow the company to continue improving its technology, such as the development of new extensions and optimizations for modern workloads like AI and hardened implementations targeted at deep submicron processes. However, ARM has utilized its own view of how to support an AI/ML workload as it defines its roadmap for the ARM ISA. This has not always been met with acceptance by companies, as they feel there are better approaches to support AI/ML algorithms on a programmable processor than ARM is promoting.

ARM’s licensing model and vast ecosystem support have made it the dominant architecture for mobile, IoT and embedded use cases. In fact, chips containing ARM IP power most of today’s devices, used by Apple, Nvidia, Qualcomm, MediaTek, Google and many more vendors in mobile, consumer and embedded silicon products. ARM has not been widely adopted for running AI/ML workloads themselves (except in microcontrollers for TinyML use cases), but rather for hosting the surrounding software stacks, because these emerging algorithms need higher computational performance and power efficiency.

Companies flock to ARM for a simple reason: its solutions work with their software. In addition, ARM closely controls the ISA and provides ARM validation suites (AVS) to ensure that software written for any ARM ISA implementation is binary compatible with other ARM ISA implementations.

Meanwhile, RISC-V provides an open alternative to expensive ARM licensing models. Its flexibility and modular design allow for tailored implementations for AI, IoT and many other applications, as well as the freedom to customize extensions for specific use cases, such as AI/ML.

RISC-V was introduced in 2010 at UC Berkeley, built on a reduced instruction set architecture. As an open ISA, RISC-V’s design emphasizes simplicity and efficiency by using a small set of simple and general-purpose instructions. Though often misconstrued as “open source,” RISC-V is an open ISA standard, meaning RISC-V International defines and manages standards that chip developers are free to implement as they choose. Anyone can download the specification and use it without requesting permission or paying a fee. In turn, these developers sometimes open source their implementations of the RISC-V ISA for others to use. This open nature encourages third-party validation, as the RISC-V architecture is scrutinized closely in the public domain. There is also a cottage industry of IP vendors who license RTL implementations of RISC-V; leading vendors include SiFive, Akeana, Andes and Codasip. Some silicon vendors whose RISC-V designs include AI/ML extensions also offer licensable versions of those designs.

RISC-V users benefit from an active open source ecosystem providing software, tools, a community for developers using the technology and more. Unencumbered by ISA licensing fees, RISC-V offers an attractive alternative model for designing customized chips, an offer taken up by Nvidia, Qualcomm, Western Digital, Huawei and more. Even more telling, many AI/ML start-ups have adopted RISC-V as the core for their AI/ML compute engine. The ability to define and customize the ISA to support new sets of AI/ML algorithms is attractive, along with the freedom to innovate without having to stay compliant with a fixed ISA specification, as an ARM license requires.

Despite the open nature of RISC-V, lack of standardization is a recurring issue for companies implementing the architecture. As various chip developers using RISC-V create and implement a mix of open and proprietary extensions for their CPU cores, the end result is silicon that can run one company’s software but fails to support another’s.
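The fragmentation problem can be pictured as a simple set comparison: a binary runs on a core only if every extension it was compiled for is implemented there. The sketch below is purely illustrative. The core names and the `Xvendora_dot8` and `Xvendorb_bf16mac` extensions are hypothetical; the only convention borrowed from RISC-V is that non-standard extension names start with "X".

```python
# Hypothetical cores and extension names, for illustration only.
STANDARD = {"I", "M", "A", "F", "D", "C"}  # common standard single-letter extensions

cores = {
    "vendor_a_core": STANDARD | {"V", "Xvendora_dot8"},
    "vendor_b_core": STANDARD | {"Xvendorb_bf16mac"},
}


def can_run(required, supported):
    """A binary runs only if every extension it uses is implemented by the core."""
    return required <= supported


def missing(required, supported):
    """Extensions the binary needs that the core does not implement."""
    return sorted(required - supported)


# A binary built only against standard extensions is portable across both cores.
portable_binary = {"I", "M", "A", "C"}
# A binary tuned for vendor A's custom dot-product instruction is not.
vendor_a_binary = {"I", "M", "V", "Xvendora_dot8"}

for name, supported in cores.items():
    print(name,
          "portable:", can_run(portable_binary, supported),
          "vendor A build:", can_run(vendor_a_binary, supported),
          "missing:", missing(vendor_a_binary, supported))
```

The more performance a vendor extracts through proprietary extensions, the larger the `missing` list becomes on everyone else's silicon.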

Using a RISC-V ISA for AI/ML designs

This backdrop shows the contrasting benefits of the ARM and RISC-V architectures, and it is increasingly clear that many AI/ML start-ups have selected RISC-V over ARM. It is worth understanding why this is the case and what this preference is likely to mean for RISC-V.

If we go back to the restrictive ARM license, and of course the fee structures, it is clear that RISC-V can enable more innovation in the customization and extension of the architecture to address AI/ML data types and use cases. The trend has been to use short integer, floating point and block floating point representations to reduce compute complexity, area and power dissipation while still retaining accuracy. For reference, a 32-bit multiply can produce a result up to 64 bits wide, whereas an 8-bit integer multiply produces a result of at most 16 bits. This means at least four times less memory and even fewer gates to produce a result. As long as the accuracy is sufficient, this is a key trade-off. As algorithms get more complex, the data types deployed must have more dynamic range, more precision and wider accumulation to retain the accuracy of the computation. This has driven the adoption of 16-bit integer and 16-bit floating point data types. Many of these designs are based around RISC-V, with custom instructions added for the new data types. However enhanced, the RISC-V single instruction scalar core is not efficient or performant on AI/ML algorithms.
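The trade-off between width and accuracy can be made concrete with a few lines of arithmetic. The sketch below, for integer operands, computes how many bits a full product needs, compares the storage for the same weights at 32 and 8 bits, and applies a simple symmetric 8-bit quantization to show the accuracy that is given up. The weight values and the quantization scheme are assumptions chosen for illustration, not a description of any particular chip.

```python
import struct


def product_bits(n_bits):
    """Bits needed to hold the product of two signed n-bit integers."""
    most_negative = -(1 << (n_bits - 1))
    # The largest product is most_negative squared; add one bit for the sign.
    return (most_negative * most_negative).bit_length() + 1


def quantize_int8(values):
    """Symmetric quantization: map the largest magnitude onto +/-127."""
    peak = max(abs(v) for v in values)
    scale = peak / 127.0 if peak else 1.0
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


weights = [0.82, -0.31, 0.05, -1.27, 0.64]  # made-up example values
q, scale = quantize_int8(weights)
restored = [x * scale for x in q]
max_error = max(abs(w - r) for w, r in zip(weights, restored))

fp32_bytes = len(weights) * struct.calcsize("<f")  # 4 bytes per value
int8_bytes = len(weights) * struct.calcsize("<b")  # 1 byte per value

print("8-bit product width:", product_bits(8))
print("32-bit product width:", product_bits(32))
print("storage bytes, 32-bit vs 8-bit:", fp32_bytes, int8_bytes)
print("worst-case quantization error:", max_error)
```

The quantization error is bounded by half a quantization step, which is why the approach works only while the model tolerates that loss of precision.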

To address this, companies are attaching vector coprocessors to the RISC-V core and using the scalar RISC-V operations for control and data management. In the case of RISC-V, many of the extensions are customized by the vendor to extract more performance outside of the datapath, as loosely coupled cores. Even an enhanced vector RISC-V core is not, in and of itself, powerful enough for leading AI/ML use cases, so the parallelism is further expanded by scaling the number of RISC-V cores used in a design. Scaling RISC-V cores into a network of processors then requires scheduling across a large number of independently executing resources. This ability to customize and extend the RISC-V core architecture has been a key driver of RISC-V adoption in AI/ML markets.

Algorithm scheduling is the Achilles’ heel of these multi-core RISC-V designs today, since they need new compilers that require extensive knowledge of the interconnect, the state of each processor node and the mapping of non-contiguous memory spaces. This is a significant investment, requiring a very specialized team and several years to produce good results. Supporting this non-standard programming environment means hand coding, extensive support and longer turnaround times. Since AI/ML is a rapidly evolving field, the ability to compile an ML model, rather than hand code it, is crucial. The hardware has to be future-proof and compatible with upcoming ML model network architectures.
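To give a feel for the scheduling problem, the sketch below assigns independent work tiles to cores with a greedy longest-processing-time-first heuristic. It is a deliberately simplified assumption: the tile costs are invented, and it ignores everything that makes the real problem hard, namely dependencies between operations, interconnect topology and where each tile's data lives in memory.

```python
import heapq


def schedule_lpt(tile_costs, num_cores):
    """Greedy longest-processing-time-first assignment of independent tiles.

    Returns (assignment, makespan): assignment[c] lists the tile indices given
    to core c, and makespan is the load of the busiest core.
    """
    heap = [(0, core) for core in range(num_cores)]  # (accumulated load, core id)
    heapq.heapify(heap)
    assignment = [[] for _ in range(num_cores)]
    # Place the most expensive tiles first, always on the least-loaded core.
    for tile in sorted(range(len(tile_costs)), key=lambda i: -tile_costs[i]):
        load, core = heapq.heappop(heap)
        assignment[core].append(tile)
        heapq.heappush(heap, (load + tile_costs[tile], core))
    makespan = max(load for load, _ in heap)
    return assignment, makespan


tile_costs = [7, 5, 4, 3, 3, 2]  # hypothetical cycle estimates per tile
assignment, makespan = schedule_lpt(tile_costs, num_cores=2)
print("tiles per core:", assignment)
print("busiest core load:", makespan, "of total", sum(tile_costs))
```

Even this toy version needs a cost estimate for every tile. A production compiler must derive those estimates, and the data placement behind them, for each new network architecture, which is where the multi-year investment goes.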

For an entire AI/ML system solution, there are additional considerations. Customer applications include video decoding/encoding, image pre/post processing and visualization. These have different compute requirements and a different coding approach, and they require additional twists to the architecture and a new set of software libraries to support them. Using RISC-V as a system host requires support for a wide variety of system software packages including embedded OS, real time OS, drivers, user stacks, security stacks, real time scheduler, I/O, video processing libraries and more. While the RISC-V ecosystem is growing rapidly, the current state of the ecosystem is not sufficient to support end-to-end customer applications.

One could design an AI/ML chip with an ARM host processor and its broad ecosystem support, controlling a RISC-V based AI/ML accelerator, but we have not seen this. It almost seems antithetical to those in the RISC-V community, and it would be interesting to see how ARM would treat such a licensee. To avoid using RISC-V as the system host processor, the x86 platform becomes the host, as in the server marketplace today, providing complete system software support, with the RISC-V AI/ML chip as an accelerator card. However, x86 is not as power efficient as ARM, and relying on an external compute subsystem adds power, latency and board interconnect delays to the processing pipeline, lowering overall application performance.

The flexibility RISC-V touts is not only difficult to program, but also carries significant costs in power and performance for AI/ML versus alternatives. The most efficient execution of AI/ML algorithms has concentrated on matrix multiplier arrays, sometimes called tensor units or tensor processing units (TPUs). The premise is that the basic AI/ML kernel algorithms can run on these compute blocks while minimizing data movement and storage, which are the two largest power consumption factors. Load/store operations are very expensive if all one wants to do is multiply-add. The use of TPUs with local memory and in-memory compute architectures, including analog techniques, seeks to address this by focusing on reducing data movement and storage. These in-memory AI/ML compute chips can leverage an x86 host as well, making it difficult for RISC-V based accelerator designs to compete against them in performance and power efficiency.
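The cost of data movement can be illustrated with a toy model that counts operand fetches from main memory during a matrix multiply. In the naive version every multiply-add fetches both operands. In the tiled version a block of each matrix is copied into local memory once and reused. The counting model and the matrix contents are assumptions made for illustration; real hardware adds caches, accumulators and write traffic that this sketch ignores.

```python
def matmul_naive(a, b):
    """Every multiply-add fetches one element of A and one of B from main memory."""
    n = len(a)
    loads = 0
    c = [[0] * n for _ in range(n)]
    for i in range(n):
        for j in range(n):
            for k in range(n):
                c[i][j] += a[i][k] * b[k][j]
                loads += 2
    return c, loads


def matmul_tiled(a, b, t):
    """Copy t x t tiles into 'local memory' once, then reuse them for t*t*t multiply-adds."""
    n = len(a)
    assert n % t == 0, "tile size must divide the matrix size"
    loads = 0
    c = [[0] * n for _ in range(n)]
    for i0 in range(0, n, t):
        for j0 in range(0, n, t):
            for k0 in range(0, n, t):
                a_tile = [row[k0:k0 + t] for row in a[i0:i0 + t]]
                b_tile = [row[j0:j0 + t] for row in b[k0:k0 + t]]
                loads += 2 * t * t  # main-memory fetches for the two tiles
                for i in range(t):
                    for j in range(t):
                        acc = 0
                        for k in range(t):
                            acc += a_tile[i][k] * b_tile[k][j]
                        c[i0 + i][j0 + j] += acc
    return c, loads


n, t = 8, 4
a = [[i + j for j in range(n)] for i in range(n)]
b = [[i - j for j in range(n)] for i in range(n)]
c_naive, loads_naive = matmul_naive(a, b)
c_tiled, loads_tiled = matmul_tiled(a, b, t)
print("same result:", c_naive == c_tiled)
print("main-memory loads, naive vs tiled:", loads_naive, loads_tiled)
```

In this model the number of main-memory fetches falls by a factor equal to the tile size, which is the effect that matrix arrays with local memory, and in-memory compute taken further still, are designed to exploit.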

An alternative approach

It is clear that ease of software development and lowest power consumption are the two most important metrics for an AI/ML chip, especially at the edge. To achieve this, SiMa.ai designed and built a very low power, highly programmable compute array that delivers industry-leading fps/watt performance, with a flexible compiler that generates highly optimized machine learning code for a wide variety of ML networks. This is combined with an ARM CPU and its broad ecosystem of tools and software.

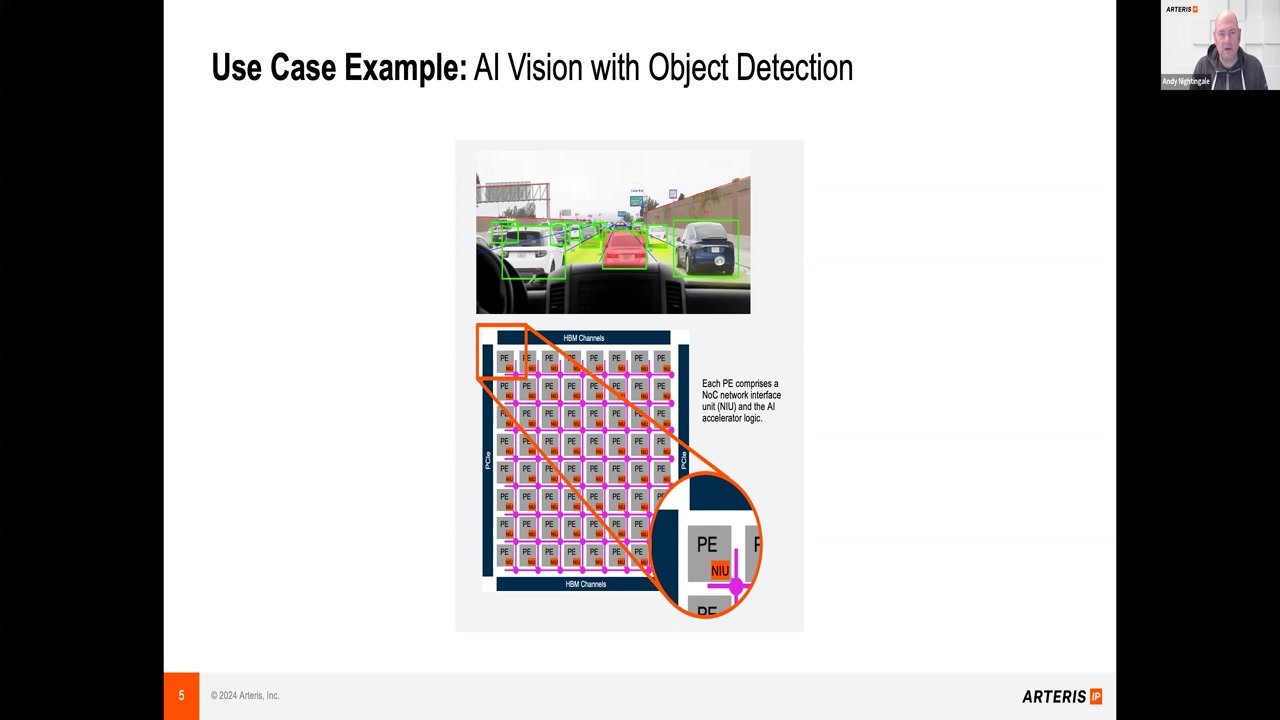

In addition, SiMa.ai’s MLSoC integrates an industry standard computer vision processor for image pre/post processing and an industry standard video encoder/decoder processor, along with all the necessary I/O to support a wide variety of sensors. All these building blocks are interconnected on a single die by a very high performance network-on-chip fabric to ensure highly efficient processing of computer vision applications.

ARM is the clear choice from a host system perspective: when building a complete embedded system-on-chip, the deployment requirements for system software security, reliability and maintainability in AI/ML designs can lean on a mature ecosystem for support. This is why the industry leaders are using ARM today and combining it with a power-efficient ML compute architecture for the future.

The ARM advantage

Chip developers who want to optimize their products for customer accessibility will continue to implement ARM’s technology into their silicon ecosystems.

ARM continues to dominate mobile and embedded devices due to its maturity and software support, as demonstrated by its recent IPO. It is used by system level companies that are integrating AI and ML into their devices, as the core of its product architecture ensures reduced power consumption and extended battery operation.

RISC-V adoption continues to surge for embedded microcontrollers, and it is increasingly targeted at data center accelerators, fully vertically integrated systems where the software is provided by the platform vendor, HPC, and emerging applications in networking where customization and privacy are priorities. RISC-V is embraced by academic institutions, which use open source implementations of the RISC-V ISA for research projects and training programs. The open architecture also gives developers the opportunity to build a platform outside the control of a single company, with auditable code that guards against potential backdoors.

RISC-V specifically falls short in validation, regression and verification of cores when compared to ARM. As an established institution with internal structures to support continued evolution, ARM delivers successive generations of processor models, leading some market observers to regard microprocessors designed with the RISC-V architecture as less robust than ARM’s.

ARM’s proprietary architecture and licensing fees somewhat inhibit customization opportunities and wider adoption, but with its proven track record, stability and established standards, it is still the frontrunner in ISA adoption.

ARM and RISC-V represent divergent models and philosophies, to be sure. Though RISC-V’s platform has the potential to make it the ubiquitous architecture from wearables to supercomputers, it must overcome its standardization issues and build a larger software ecosystem before it can gain real ground on ARM.

ARM’s maturity, software support and licensing ecosystem give it the advantage for the foreseeable future as the dominant force behind mobile and Internet of Things devices operating at the edge.

Captions

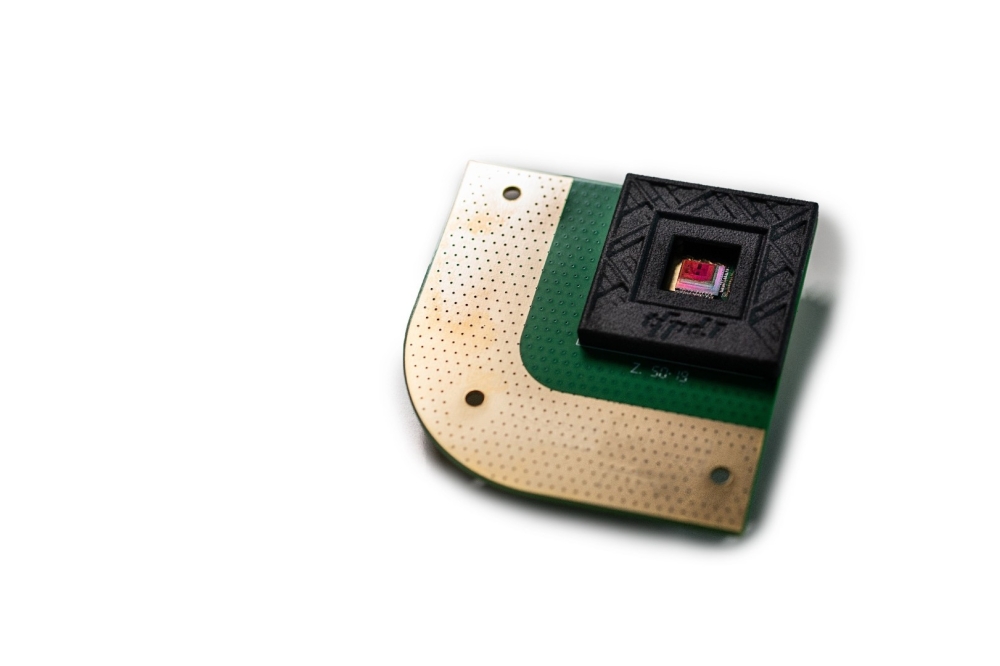

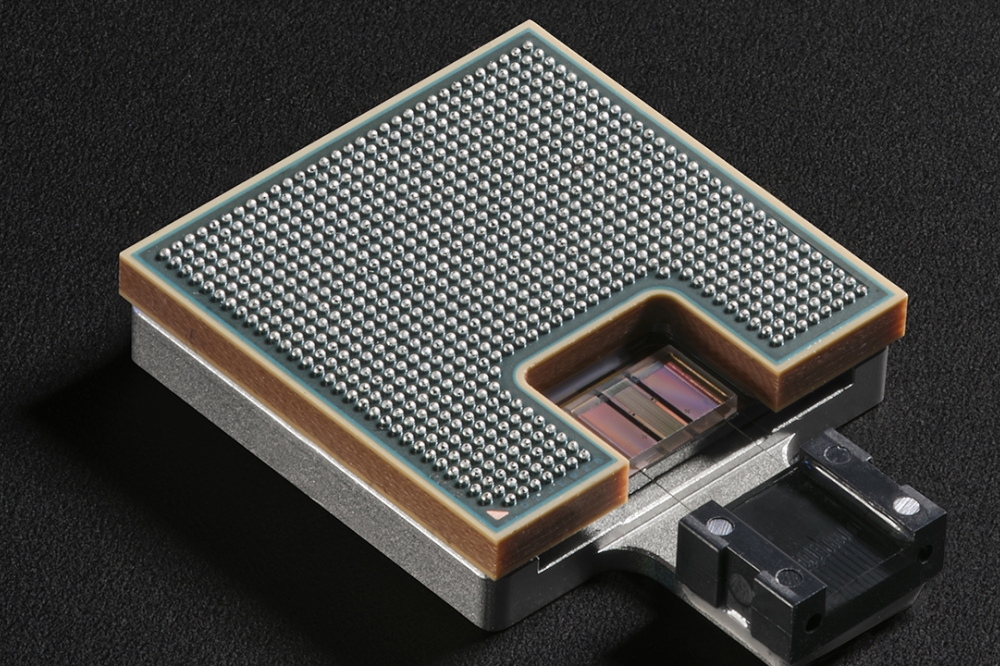

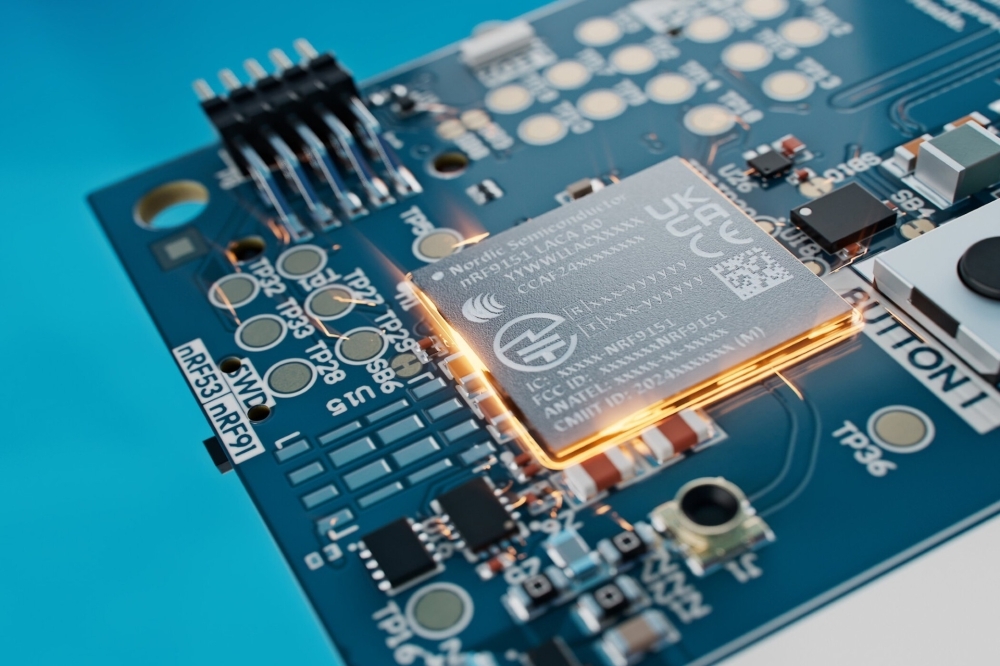

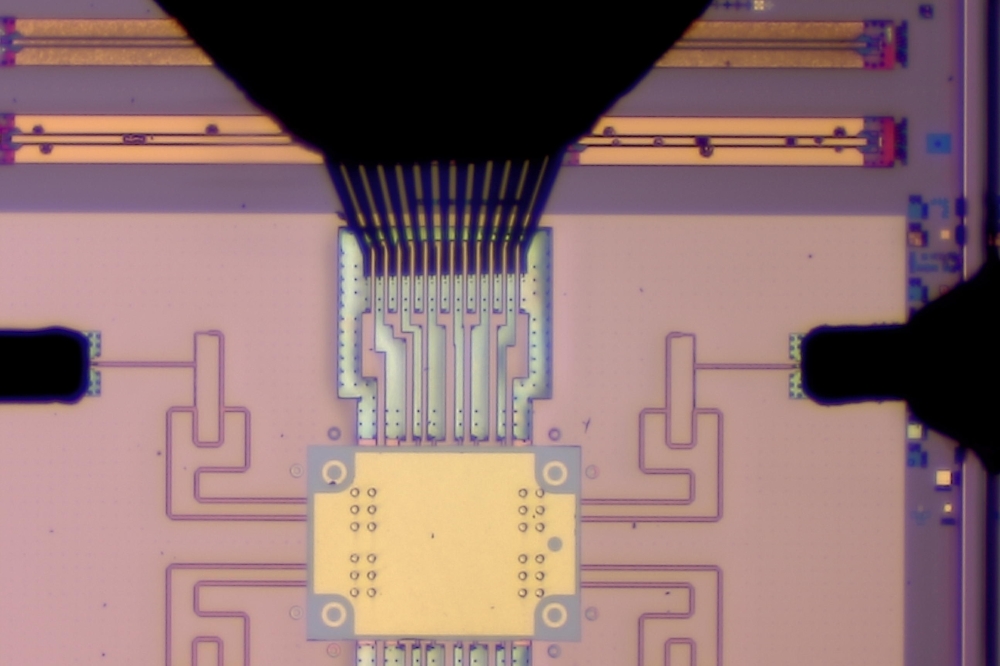

SiMa.ai’s purpose-built MLSoC™

SiMa.ai’s MLSoC™ Evaluation Board