Tech, software, and semiconductor companies face the highest AI security risk in the S&P 500

nexos.ai highlights sector-leading exposure to IP theft, insecure AI outputs, and data leakage, and outlines practical controls to protect code and chip-design intellectual property (IP).

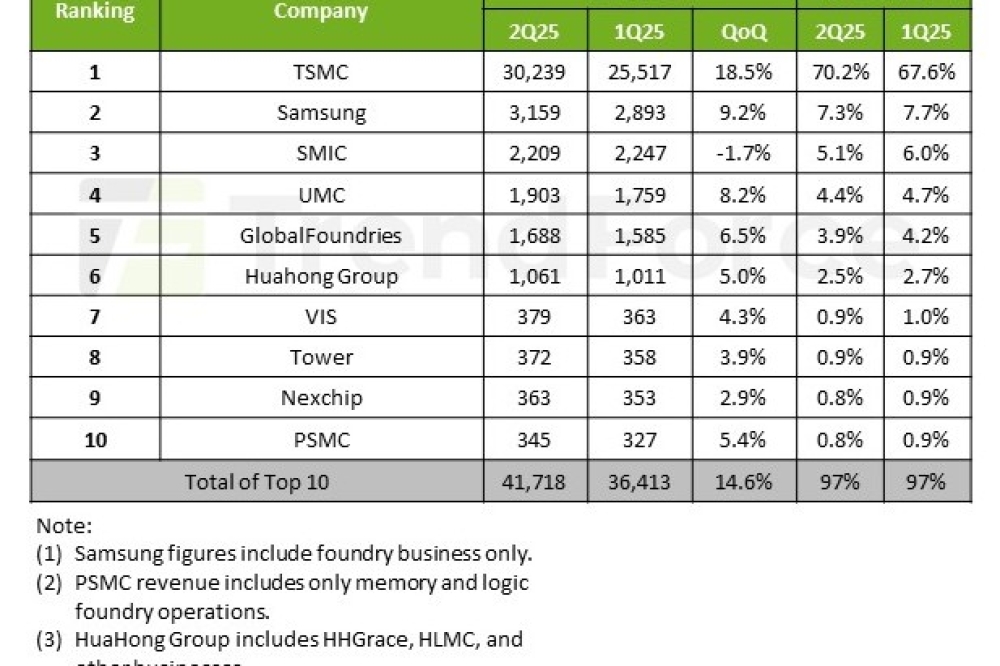

AI risk is no longer just a hypothetical concern for America’s largest companies. The Autonomy Institute's new analysis shows that 3 out of 4 S&P 500 firms increased their AI risk disclosures this year, highlighting how deeply AI security issues are reshaping corporate strategies. The technology, software, and semiconductor industries face the greatest exposure. According to Cybernews research, this sector alone has 202 documented AI security risks across 61 companies, the highest count in the index.

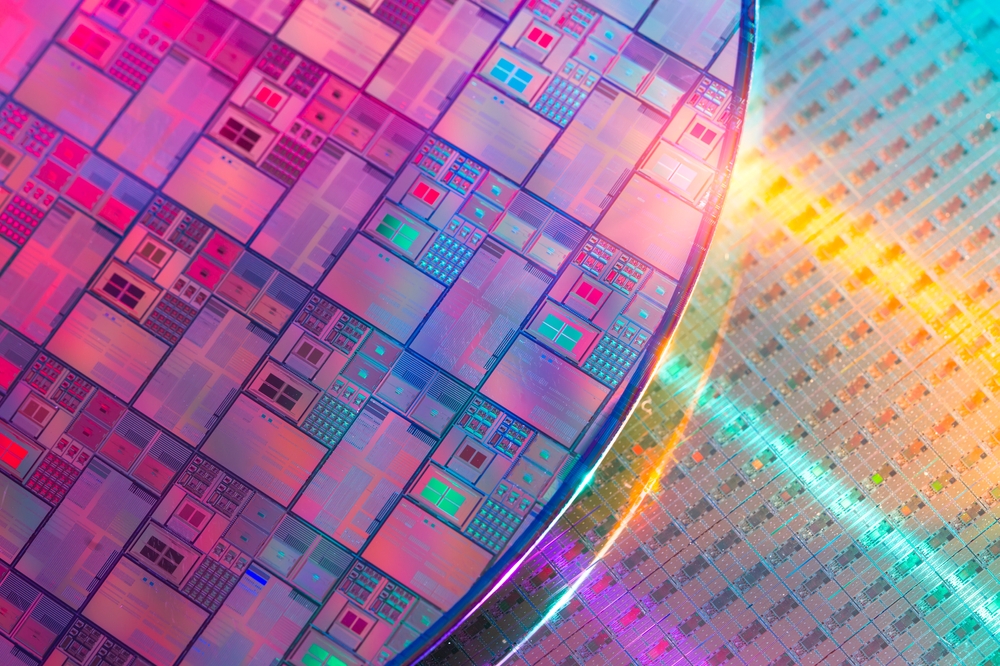

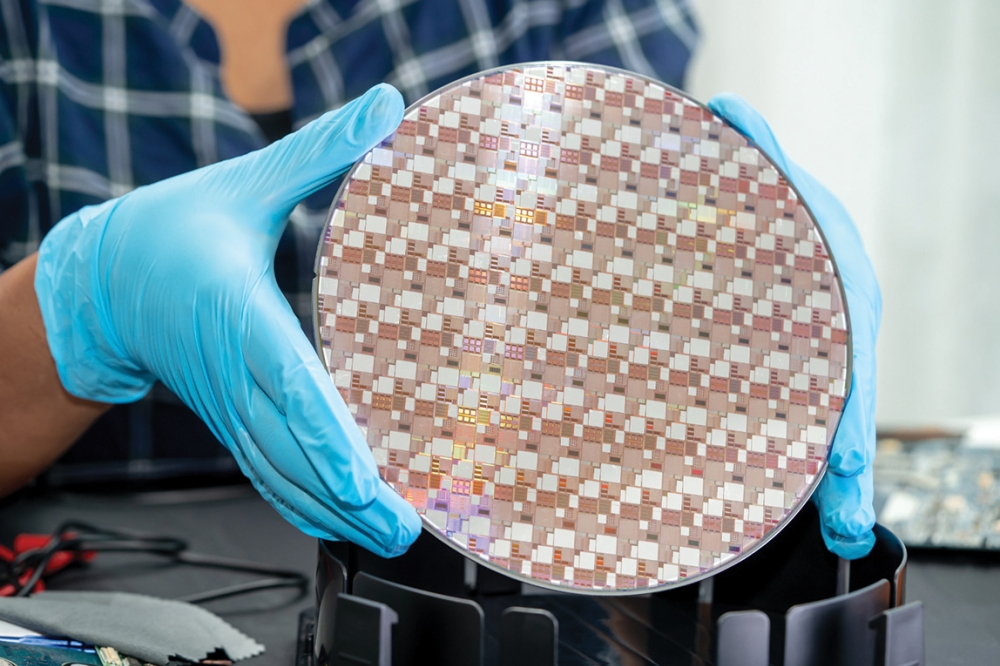

These risks include 40 flagged cases of potential intellectual property theft, 34 insecure AI outputs, and 32 instances of data leakage. The Autonomy Institute’s accompanying analysis underscores how widespread the issue is: 1 in 5 companies in the S&P 500 now list proprietary data or IP exposure as a top AI risk, and every single semiconductor company in the index updated its filings in 2025 to acknowledge significant AI threats.

Cybernews’ investigation reviewed public disclosures of AI use across 327 S&P 500 companies, finding nearly 1,000 real-world AI deployments, from internal analytics tools to customer-facing chatbots, and identifying 970 potential AI security issues in total. Each case included specific examples and was categorized into risks such as prompt injection, model extraction, and accidental data exposure.

For tech and semiconductor companies, the exposure is concrete. One malicious prompt can trick a model into revealing confidential code or unreleased design files. A sustained model-extraction attack, in which an attacker repeatedly queries a model to approximate its behavior, can reconstruct algorithms and expose trade secrets. Cybernews warns that since these companies hold “a high concentration of proprietary algorithms and sensitive code,” they are “especially vulnerable to both data leaks and IP theft.”

“AI is now a core business driver. Without the right guardrails, it carries strategic risks, especially in tech and semiconductors,” says Žilvinas Girėnas, head of product at nexos.ai. “IP theft, insecure outputs, and prompt-driven leaks are no longer theoretical. The solution is proactive: policy-first design, prompt redaction at the edge, strict model access controls, and audit-ready logs. This is how companies can protect their most valuable asset, their innovation, while still moving fast with AI.”

Why it matters

For technology and semiconductor companies, intellectual property isn’t just valuable — it is the core of the business. A single leak of source code, chip schematics, or proprietary algorithms can wipe out years of competitive advantage.

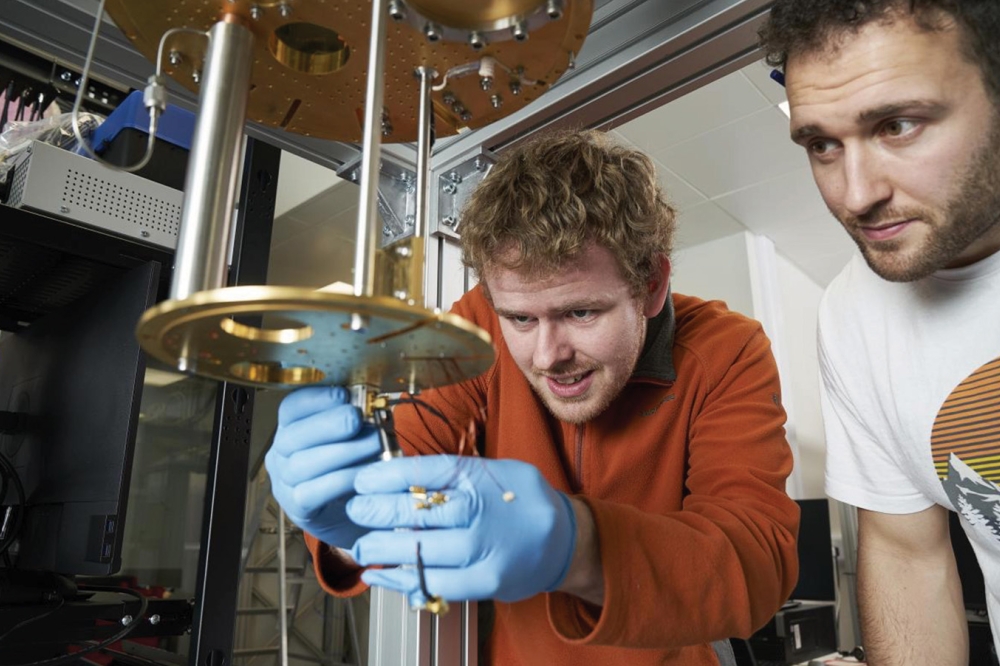

Recent incidents demonstrate how quickly things can go wrong. At Samsung, engineers pasted confidential code into ChatGPT, where it could be retained and used to train the model, leading to a corporate ban on generative AI tools. In 2024, an EDA (Electronic Design Automation) software company suffered a serious exposure: internal prompts used to guide the AI-assisted design of chip layouts and verification logic were found circulating in developer forums after being entered into an unsecured third-party AI model. At multiple semiconductor companies, misconfigured AI assistants have exposed unreleased product specifications during internal testing.

These aren’t isolated mistakes. They indicate that without enforced AI policies, redaction during use, and strict model oversight, every AI query risks becoming a security breach.

“Tech companies are racing to ship AI features. That pace often skips the guardrails protecting code and designs. Centralized controls like policy, redaction, routing, and clear audit trails are the only way to keep innovation from becoming an IP liability,” says Girėnas.

Industry best practices

Girėnas recommends that organizations handling sensitive code, algorithms, or design files adopt:

Centralized policy enforcement to block risky prompts and apply consistent output filters.

Automated PII and token-level redaction to strip or mask secrets before they reach AI models.

Strict model access controls and routing to keep sensitive workloads on private or approved systems.

Comprehensive audit logs to support IP tracking, compliance, and post-incident investigation.
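
As a rough illustration of how these four controls might fit together, the Python sketch below places a small gateway in front of model calls. It is not nexos.ai's implementation: the `Gateway` class, the regex patterns, the sensitive-term list, and the model names `private-model` and `approved-external-model` are all hypothetical, and a production system would rely on a vetted secret scanner and a real policy engine instead.

```python
import hashlib
import json
import re
from dataclasses import dataclass, field

# Hypothetical redaction patterns (assumption): real deployments would use
# a maintained secret/PII scanner rather than two hand-written regexes.
SECRET_PATTERNS = {
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}

# Hypothetical policy (assumption): terms that mark a prompt as sensitive.
SENSITIVE_MARKERS = ("confidential", "netlist", "tapeout")


@dataclass
class Gateway:
    """Toy gateway: redact, route, and log every prompt before model use."""

    audit_log: list = field(default_factory=list)

    def redact(self, prompt):
        """Mask anything matching a secret pattern; return text and hit names."""
        found = []
        for name, pattern in SECRET_PATTERNS.items():
            prompt, count = pattern.subn(f"[REDACTED:{name}]", prompt)
            if count:
                found.append(name)
        return prompt, found

    def route(self, prompt):
        """Keep prompts containing sensitive terms on the private model."""
        lowered = prompt.lower()
        if any(marker in lowered for marker in SENSITIVE_MARKERS):
            return "private-model"
        return "approved-external-model"

    def handle(self, user, prompt):
        """Apply redaction and routing, then append an audit record."""
        clean, found = self.redact(prompt)
        target = self.route(clean)
        entry = {
            "user": user,
            "target": target,
            "redactions": found,
            # Store a hash, not the raw prompt, so the log itself
            # does not become a second copy of the sensitive text.
            "prompt_sha256": hashlib.sha256(prompt.encode()).hexdigest(),
        }
        self.audit_log.append(json.dumps(entry, sort_keys=True))
        return clean, target


gateway = Gateway()
clean, target = gateway.handle(
    "eng1", "Review this confidential netlist, key AKIAABCDEFGHIJKLMNOP"
)
print(clean)
print(target)
```

In this sketch the redacted prompt, not the original, is what would be forwarded to the chosen model, and every request leaves one audit record regardless of where it is routed.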