Innovative approaches to scaling network-on-chip architectures

The evolution of cloud computing has created a pressing need for solutions that maximize computational efficiency within tight physical and energy constraints. Modern data centers depend heavily on multi-core processors, often packing over 100 cores into a single chip.

By Moritz Brunion, Researcher, Design-Technology Co-optimization, and James Myers, Program Director, System Technology Co-optimization, both at imec.

These processors are designed to handle the growing demands of cloud-based applications by sharing network, memory, and storage resources, transforming each core into a rentable unit of processing power.

However, as processors grow more powerful, the network-on-chip (NoC) has emerged as a critical bottleneck in scaling. The NoC, which routes data among CPU cores and memory, relies on metal interconnects whose resistance rises as they are scaled down. This higher resistance increases power consumption and requires additional signal repeaters to maintain performance over long distances.
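To see why rising wire resistance forces designers to add repeaters, the sketch below uses a textbook first-order Elmore-delay model of a driven RC wire. This is an illustrative assumption, not imec's methodology: the per-unit-length resistance `r` and capacitance `c`, the repeater drive resistance `r_drv` and input capacitance `c_in` are hypothetical normalized values, and `segment_delay`, `line_delay` and `best_repeated_delay` are hypothetical helpers. In this model an unrepeated wire's delay grows with the square of its length, while a wire split into an optimal number of repeated segments grows roughly linearly.

```python
import math


def segment_delay(seg_len: float, r: float, c: float, r_drv: float, c_in: float) -> float:
    """Elmore delay of one repeater driving a distributed RC wire segment
    that ends at the input capacitance of the next repeater."""
    wire_r = r * seg_len
    wire_c = c * seg_len
    # The driver resistance charges the whole wire plus the load; the distributed
    # wire resistance effectively sees half of its own capacitance plus the load.
    return r_drv * (wire_c + c_in) + wire_r * (wire_c / 2 + c_in)


def line_delay(length: float, n_segments: int, r: float, c: float,
               r_drv: float, c_in: float) -> float:
    """Total delay when the wire is cut into n equal segments, each with its own driver."""
    seg = length / n_segments
    return n_segments * segment_delay(seg, r, c, r_drv, c_in)


def best_repeated_delay(length: float, r: float, c: float, r_drv: float,
                        c_in: float, max_segments: int = 1000) -> tuple[float, int]:
    """Brute-force search for the segment count that minimizes total delay."""
    return min((line_delay(length, n, r, c, r_drv, c_in), n)
               for n in range(1, max_segments + 1))


if __name__ == "__main__":
    # Hypothetical normalized units, chosen only to show the scaling trend.
    params = dict(r=1.0, c=1.0, r_drv=1.0, c_in=1.0)
    for length in (100, 200, 400):
        unrepeated = line_delay(length, 1, **params)
        repeated, n = best_repeated_delay(length, **params)
        n_analytic = length * math.sqrt(
            params["r"] * params["c"] / (2 * params["r_drv"] * params["c_in"]))
        print(f"L={length}: unrepeated={unrepeated:.0f}, "
              f"repeated={repeated:.0f} with {n} segments (analytic ~{n_analytic:.0f})")
```

In this model the continuous optimum is n ≈ L·sqrt(r·c / (2·r_drv·c_in)), so quadrupling `r` roughly doubles the number of repeaters needed for the same wire, which mirrors the point that more resistive interconnect needs more repeaters.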

Adding to this, rising core counts and high-density (HD) logic scaling, while NoC dimensions stay the same, cause the NoC to claim a significantly larger share of die area. These factors add complexity and compromise the goal of achieving smaller and more efficient designs.

Addressing these challenges has led to a variety of innovative solutions, though none without trade-offs. 2D mesh topologies, while straightforward and scalable, suffer from rising communication latency as the number of cores increases, because traffic between distant cores must traverse more router hops.
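As a rough illustration of why mesh latency climbs with core count, the sketch below computes the average hop count between two uniformly random tiles of a k×k 2D mesh under minimal routing (for example dimension-order XY routing), where the hop count equals the Manhattan distance. Uniform random traffic is an assumption made for illustration; real cloud workloads differ. The brute-force average matches the closed form 2(k^2 - 1)/(3k), so the average distance grows roughly with the square root of the core count, and every extra hop adds router and link latency.

```python
from itertools import product


def avg_hops_bruteforce(k: int) -> float:
    """Mean Manhattan distance over all ordered (source, destination) tile pairs
    in a k x k mesh, including a tile sending to itself."""
    tiles = list(product(range(k), repeat=2))
    total = sum(abs(x1 - x2) + abs(y1 - y2)
                for (x1, y1) in tiles for (x2, y2) in tiles)
    return total / len(tiles) ** 2


def avg_hops_closed_form(k: int) -> float:
    """Closed form of the same mean: each dimension contributes (k^2 - 1) / (3k)."""
    return 2 * (k * k - 1) / (3 * k)


if __name__ == "__main__":
    for k in (4, 8, 16):
        print(f"{k * k:4d} cores: {avg_hops_bruteforce(k):.3f} hops on average "
              f"(closed form {avg_hops_closed_form(k):.3f})")
```

For example, going from 64 to 256 cores raises the average from 5.25 to 10.625 hops in this model.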

Other approaches, such as routerless configurations or workload-specific optimizations, can improve performance but are often impractical for general-purpose systems like those used in cloud computing. Widening the channels, that is, increasing the number of signals per channel, raises link throughput but often results in higher costs and resource demands, further complicating the NoC landscape.

In response to these constraints, imec researchers have pioneered two transformative approaches: relocating NoC channels to a dedicated die and integrating them with the backside power delivery network (BSPDN). These strategies, guided by system-technology co-optimization (STCO) principles, aim to overcome scaling limitations while balancing cost and performance. Of the two, co-integrating NoC channels with the BSPDN offers particularly promising results for the future design of such high-core-count systems.

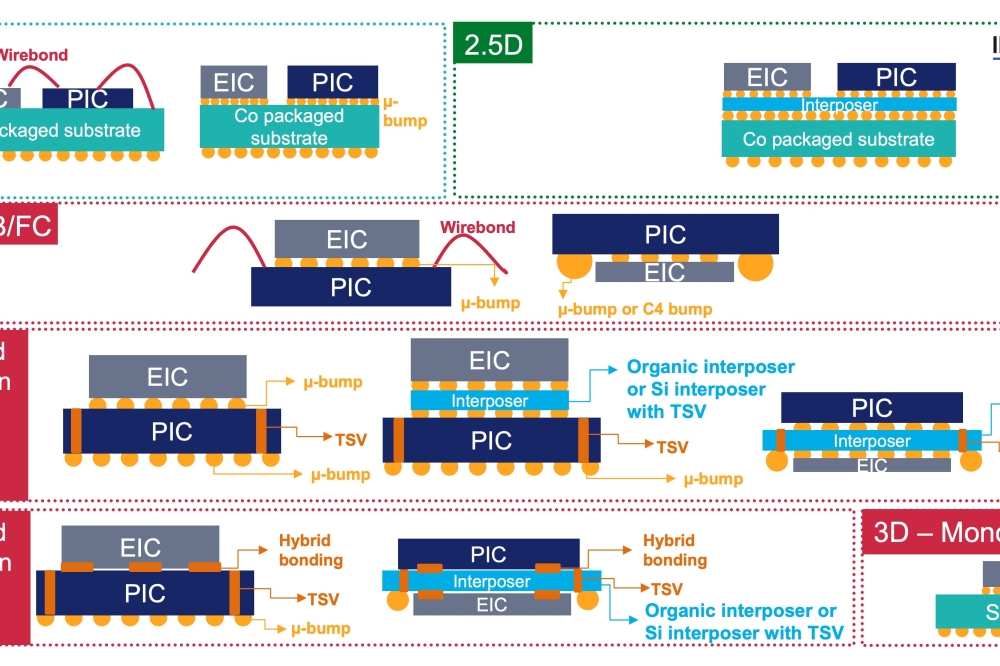

Figure 1. 2D reference system (top left) and tile (top right) with NoC channel routes and logic within a single FEOL and BEOL stack. The bottom row depicts the two new approaches: (left) the 3D stacking design, showing the separation of NoC channel routes into a dedicated routing die bonded to the A10 logic die, and (right) the backside integration approach, showing NoC channel routes integrated into the backside metal layers of the BSPDN.

Dedicated NoC routing on a separate die

One novel approach proposed by imec involves offloading the NoC channel routes to a dedicated die, independent of the main logic layers. Using wafer-to-wafer face-to-face hybrid bonding, this architecture can exploit high-bandwidth vertical data transfer at minimal energy per bit. The NoC die can be fabricated in a less advanced process, such as N22, significantly reducing production costs.

This design also simplifies the back-end-of-line (BEOL) configuration by using fewer metal layers, thereby improving functional integration efficiency. The NoC routers, which make real-time routing decisions, remain on the main logic die, keeping latency to a minimum.

This design, however, is not without its challenges. Power distribution becomes more complicated, as the additional die requires its own power infrastructure. Physical design experiments also revealed an 8% increase in propagation delay and a 15% rise in energy per bit transferred across a channel link compared to conventional 2D NoC systems.

Backside integration with the BSPDN

A second solution involves co-integrating NoC channels with the BSPDN on the backside of the wafer. The BSPDN’s existing metal layers, optimized for power delivery with wider pitches and lower resistance, provide an ideal foundation for routing NoC channels over long distances at high clock speeds. This integration takes advantage of the relaxed design constraints of backside interconnects to achieve efficient signal transmission.

To implement this approach, the researchers made several design adjustments. First, they devised a bidirectional wiring scheme that lets both horizontal and vertical NoC channels share the same metal layer. This reduces the total number of required layers, lowering both cost and the overall chip footprint. Second, since the BSPDN currently does not support active devices on the backside, signal repeaters were placed as compact “islands” on the main logic die. These islands maintain robust signal strength at a small area overhead, while moving the channel routes to the backside frees up routing resources on the main logic die for other logic components. Finally, because data and power delivery now share routing resources, the risk of IR drop rises, which can affect performance. To mitigate this, the researchers traded off the PDN pitches and IR drop against the area overhead of the repeater islands, preserving the benefits of the BSPDN and ensuring stable voltage levels while supporting reliable data transmission across the NoC.

Figure 2. (Top) The technology stack cross-section for the 2D reference shows both NoC channels and crosspoints (XP) in the A10 logic front end. (Middle) The NoC routing is entirely offloaded to a dedicated die, freeing up placement and routing resources on the main logic die. In this case, the channels in the separate die are manufactured in an older technology, while the crosspoints reside in the A10 logic. (Bottom) The NoC channels share routing resources with the power delivery network, and the repeaters are located in the logic die front end. NoC buffer islands occupy 1-2% of a high-performance CPU core (compared with up to 10% of the silicon area dedicated to the NoC in the 2D reference), with the addition of two dedicated backside metal layers for NoC channels.

Cost-benefit analysis favours NoC backside integration

Choosing between the two approaches means weighing cost-effectiveness alongside technical trade-offs. The dedicated-die method offers greater flexibility and customization options but incurs higher manufacturing costs, because it requires an additional fully processed wafer. By contrast, integrating NoC channels with the BSPDN reuses existing infrastructure, adding only a few extra metal layers. This significantly reduces costs and optimizes the use of front-side silicon, making it an attractive option for applications that require high core densities, such as cloud server CPUs.
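The structural difference in cost can be made concrete with a deliberately simple wafer-cost sketch. Every number below is a hypothetical placeholder, not an imec estimate, and the model assumes the logic wafer carries a BSPDN in every scheme while ignoring yield, die size, and the front-side area savings of both approaches. It only encodes what the text describes: backside integration adds a couple of metal layers (two, per Figure 2) to the logic wafer, whereas the dedicated-die approach adds a second fully processed wafer plus a hybrid-bonding step. `CostInputs` and `cost_ratios` are hypothetical names.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CostInputs:
    """Hypothetical normalized costs; placeholders only, not imec data."""
    logic_wafer: float = 1.0        # fully processed A10 logic wafer (2D baseline)
    backside_layer: float = 0.03    # one extra backside metal layer
    backside_noc_layers: int = 2    # dedicated backside NoC layers (per Figure 2)
    routing_wafer: float = 0.25     # fully processed older-node (e.g. N22) routing wafer
    hybrid_bonding: float = 0.05    # wafer-to-wafer face-to-face bonding step


def cost_ratios(p: CostInputs) -> dict[str, float]:
    """Wafer cost of each scheme, normalized to the 2D baseline."""
    costs = {
        "2D baseline": p.logic_wafer,
        "backside NoC": p.logic_wafer + p.backside_noc_layers * p.backside_layer,
        "dedicated NoC die": p.logic_wafer + p.routing_wafer + p.hybrid_bonding,
    }
    return {scheme: cost / p.logic_wafer for scheme, cost in costs.items()}


if __name__ == "__main__":
    for scheme, ratio in cost_ratios(CostInputs()).items():
        print(f"{scheme:>18}: {ratio:.2f}x")
```

Real per-layer and per-wafer costs, plus yield and die-area terms, would be needed before drawing quantitative conclusions; under these assumptions the sketch only shows why a few extra layers tend to cost less than an extra fully processed wafer.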

Backside integration also excels in scalability. As core counts per processor continue to rise, the demand for wider NoC channels increases. By leveraging the backside metal layers, this approach minimizes congestion and enables higher data throughput without substantially increasing the silicon footprint. These attributes make it a compelling solution for next-generation chip architectures.

Figure 3. Estimated wafer cost ratios of different NoC integration schemes, normalized to the 2D baseline. As the width of the NoC channel increases, the cost advantage of backside integration becomes more evident.

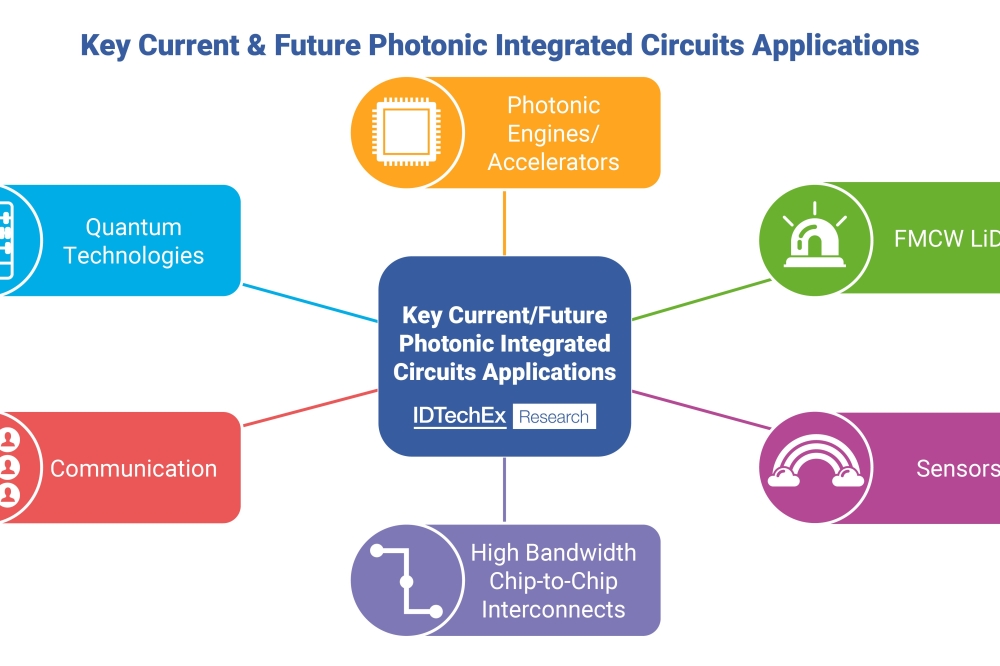

Broader implications and future directions

While backside NoC integration is well suited to cloud server CPUs, its relevance to other applications, such as GPUs and accelerators with coarse-grained, regular topologies, is still being explored. Wider adoption also depends on advances in electronic design automation (EDA) tools. Currently, implementing backside NoC channels requires specialized workflows, which can be labor-intensive and costly. As EDA tools evolve, they are expected to streamline the design process, making backside integration more accessible and practical for a variety of use cases.

In conclusion, the integration of NoC channels with the BSPDN represents a significant breakthrough in chip design. By addressing routing and power delivery challenges simultaneously, this approach exemplifies the power of STCO principles. As the demand for scalable, high-performance architectures continues to grow, innovations like backside NoC integration will play a pivotal role in shaping the future of semiconductor technology and driving the next wave of computational advancements.