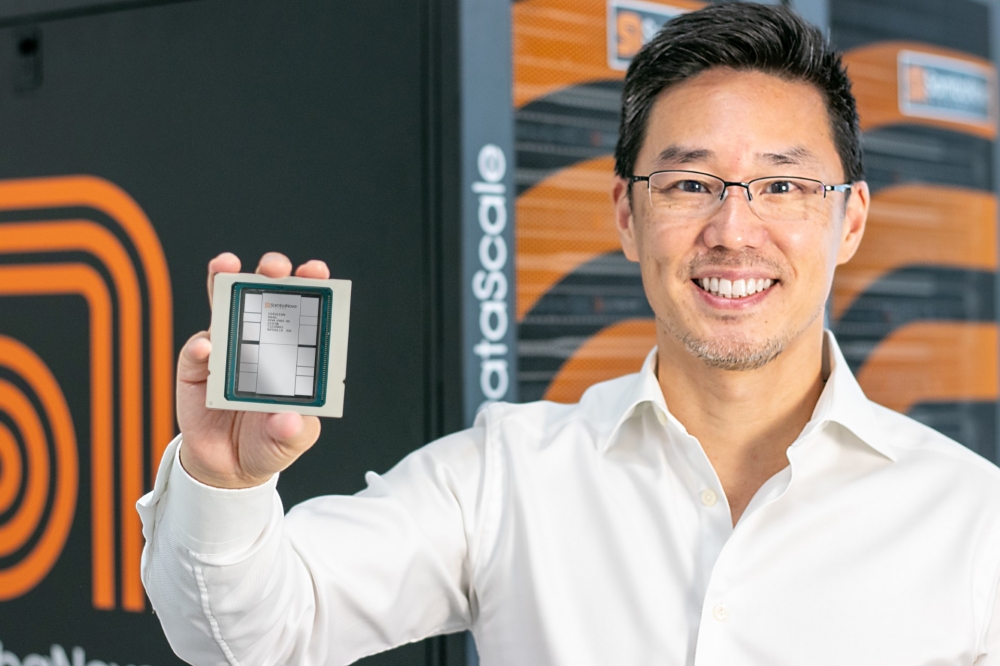

SambaNova unveils AI chip

SambaNova unveils an intelligent AI chip capable of running models of up to 5 trillion parameters, enabling fast and scalable inference and training without sacrificing model accuracy.

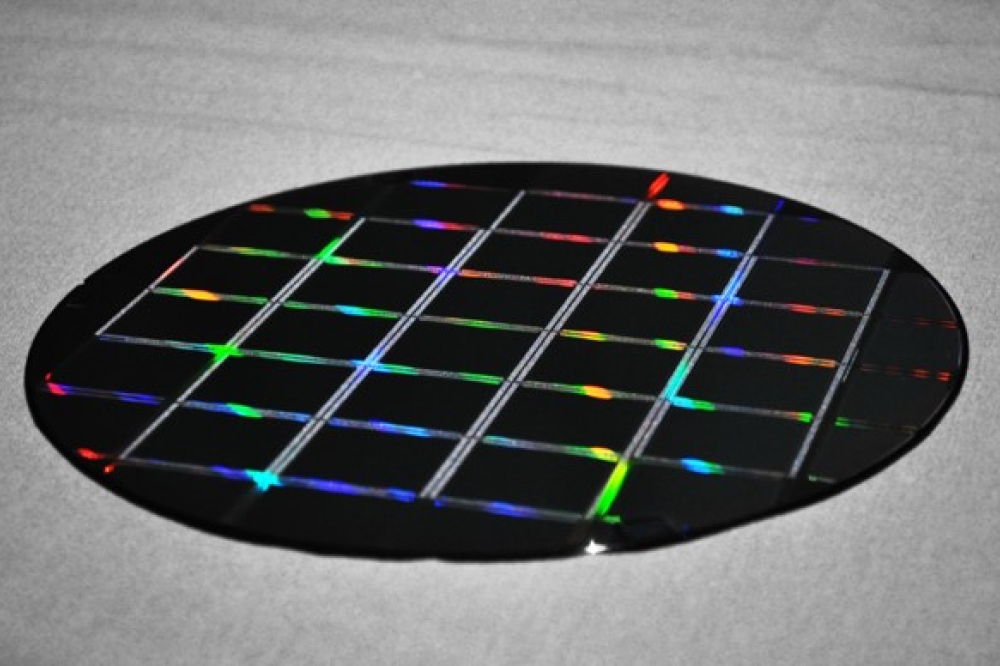

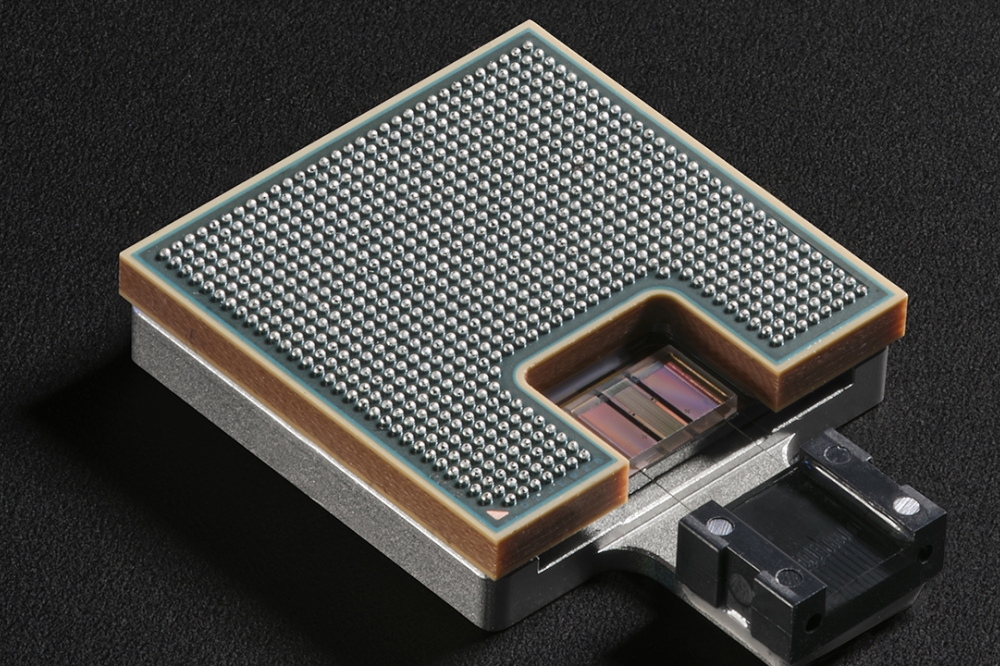

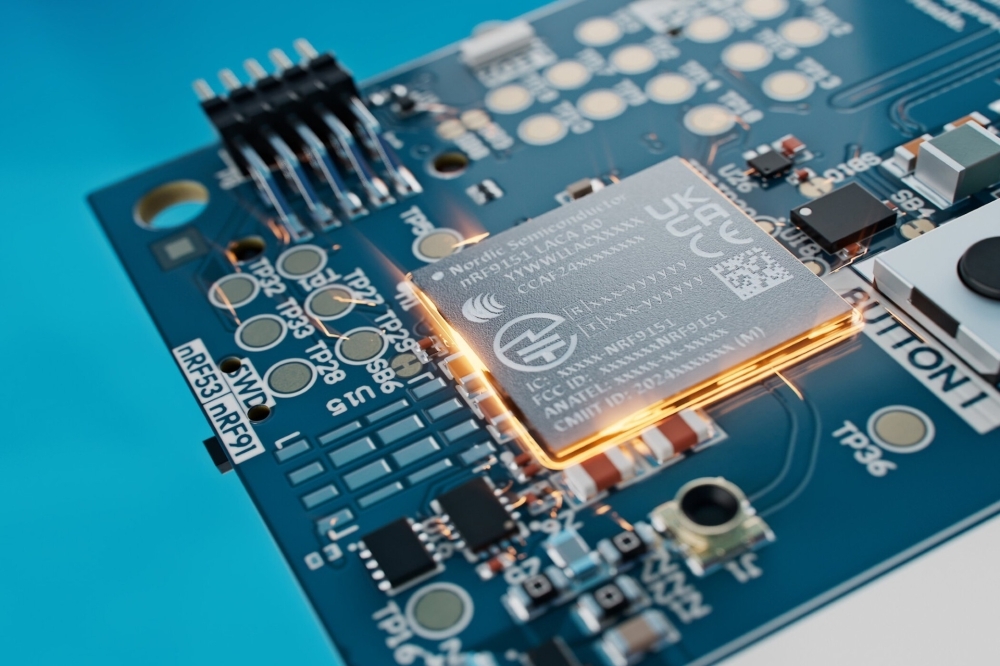

SambaNova Systems has introduced a 'revolutionary' new chip, the SN40L. The SN40L will power SambaNova’s full-stack large language model (LLM) platform, the SambaNova Suite. Its new design offers both dense and sparse compute and includes both large and fast memory, making it a truly “intelligent chip”.

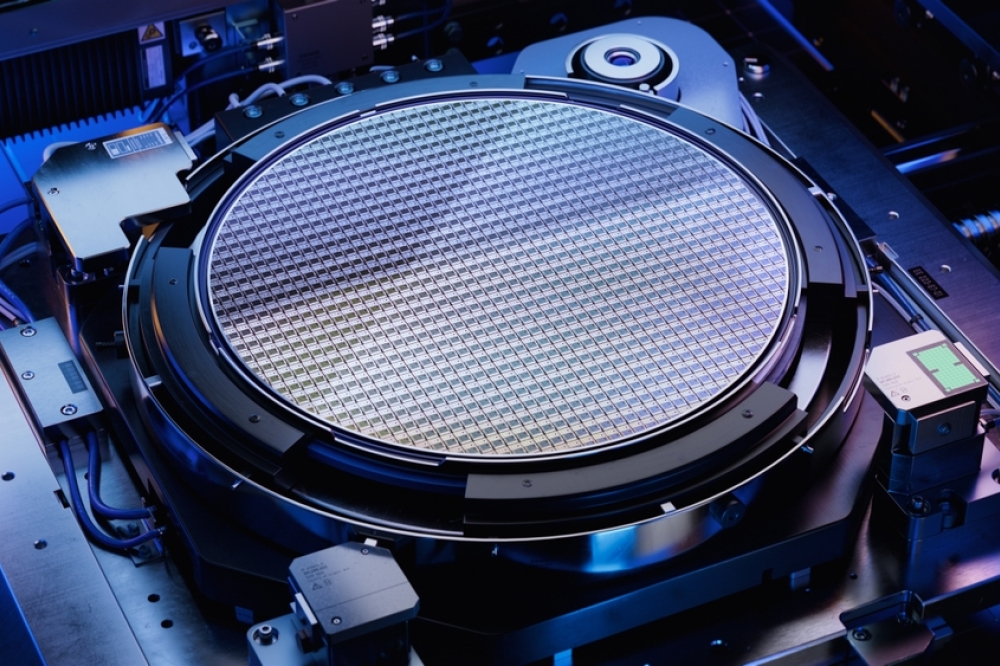

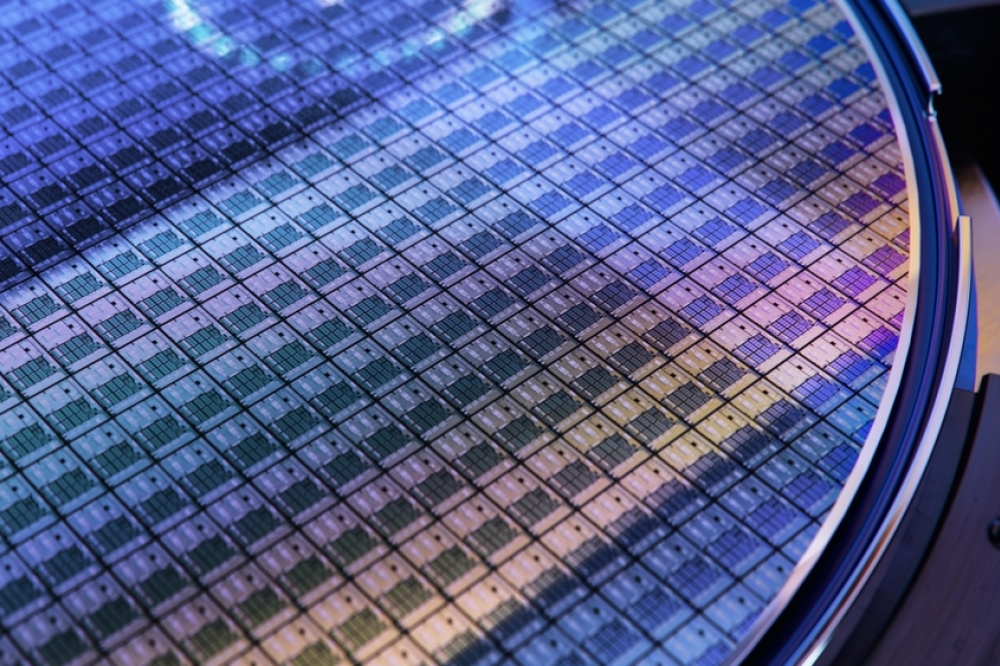

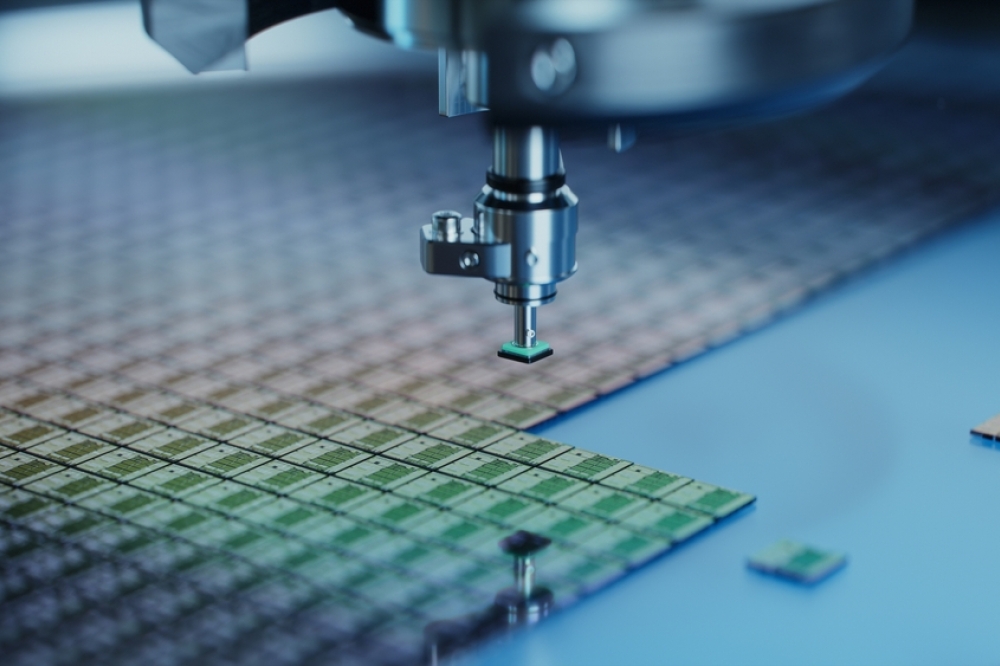

SambaNova’s SN40L, manufactured by TSMC, can serve a 5 trillion parameter model, with 256k+ sequence length possible on a single system node. This is only possible with an integrated stack, and is a vast improvement on previous state-of-the-art chips, enabling higher quality models, with faster inference and training, at a lower total cost of ownership.
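For a sense of scale, the 5 trillion parameter figure can be turned into a rough estimate of the memory needed just to hold the model weights. The sketch below is a back-of-the-envelope calculation, not a SambaNova specification: the announcement does not state the numeric precision used, so the 2 bytes per parameter (16-bit weights) default is an assumption, and the function name is hypothetical.

```python
def weight_memory_terabytes(num_parameters: int, bytes_per_parameter: int = 2) -> float:
    """Approximate weight storage in decimal terabytes (1 TB = 1e12 bytes).

    bytes_per_parameter is an assumption; the announcement gives no precision.
    Activations, caches, and optimizer state are deliberately ignored.
    """
    return num_parameters * bytes_per_parameter / 1e12


FIVE_TRILLION = 5 * 10**12

# Assumed 16-bit weights (2 bytes each).
print(weight_memory_terabytes(FIVE_TRILLION))     # 10.0

# Assumed 8-bit weights (1 byte each).
print(weight_memory_terabytes(FIVE_TRILLION, 1))  # 5.0
```

Under these assumptions the weights alone occupy terabytes, which is why the amount of memory a single node can address matters for a model of this size.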

“Today, SambaNova offers the only purpose-built full-stack LLM platform, the SambaNova Suite, now with an intelligent AI chip; it's a game changer for the Global 2000,” said Rodrigo Liang, co-founder and CEO of SambaNova Systems. “We’re now able to offer these two capabilities within one chip – the ability to address more memory, with the smartest compute core – enabling organizations to capitalize on the promise of pervasive AI, with their own LLMs to rival GPT-4 and beyond.”

The new chip is just one element of SambaNova’s full-stack LLM platform, which solves the biggest challenges that enterprises face when deploying generative AI. “We’ve started to see a trend towards smaller models, but bigger is still better and bigger models will start to become more modular,” said Kunle Olukotun, co-founder of SambaNova Systems. “Customers are requesting an LLM with the power of a trillion-parameter model like GPT-4, but they also want the benefits of owning a model fine-tuned on their data. With the new SN40L, our most advanced AI chip to date, integrated into a full-stack LLM platform, we’re giving customers the key to running the largest LLMs with higher performance for training and inference, without sacrificing model accuracy.”

Introducing SambaNova Suite, powered by the SN40L:

- SambaNova’s SN40L can serve a 5 trillion parameter model, with 256k+ sequence length possible on a single system node. This enables higher quality models, with faster inference and training at a lower total cost of ownership.

- Larger memory unlocks true multimodal capabilities from LLMs, enabling companies to easily search, analyze, and generate data across modalities.

- Lower total cost of ownership (TCO) for AI models due to greater efficiency in running LLM inference.

Based on six years of engineering and customer feedback, SambaNova’s team thought deeply about customers' challenges with AI implementation (the cost of training and inference, limitations on sequence length, and the speed, or latency, of LLMs) and designed the LLM platform to be fully modular and extensible. This enables customers to incrementally add modalities and expertise in new areas, and to increase the model’s parameter count (all the way up to 5T) without compromising on inference performance.

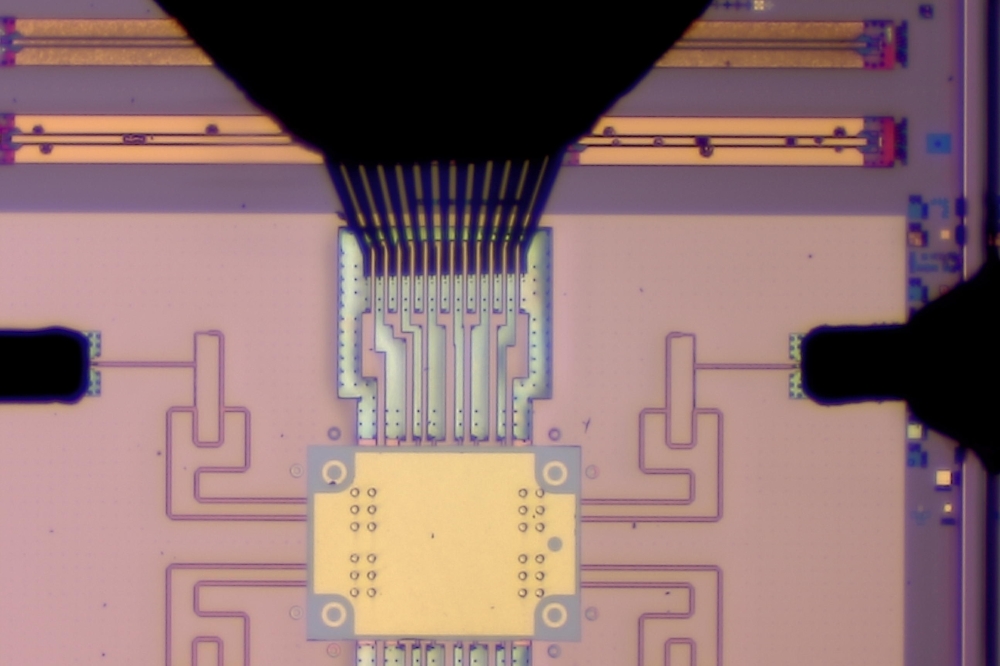

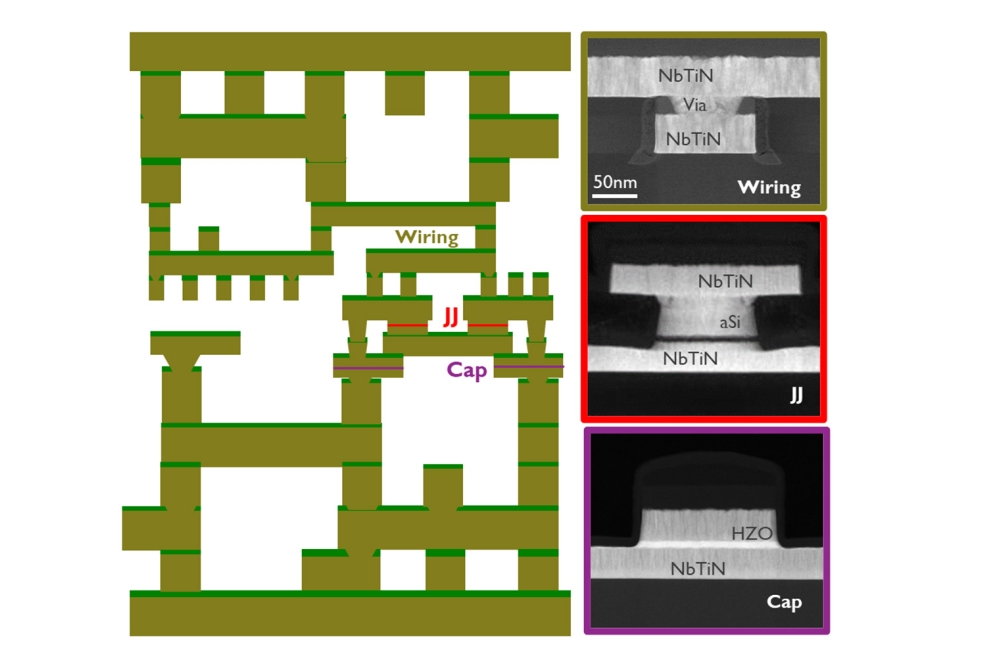

“SambaNova's SN40L chip is unique. It addresses both HBM (High Bandwidth Memory) and DRAM from a single chip, enabling AI algorithms to choose the most appropriate memory for the task at hand, giving them direct access to far larger amounts of memory than can be achieved otherwise. Plus, by using SambaNova’s RDU (Reconfigurable Dataflow Unit) architecture, the chips are designed to efficiently run sparse models using smarter compute,” said Peter Rutten, Research Vice-President, Performance-Intensive Computing, at IDC.

New models and capabilities within SambaNova Suite:

- Llama2 variants (7B, 70B): state-of-the-art open-source language models enabling customers to adapt, expand, and run the best LLMs available, while retaining ownership of these models.

- BLOOM 176B: the most accurate multilingual foundation model in the open-source community, enabling customers to solve more problems with a wide variety of languages, whilst also being able to extend the model to support new, low-resource languages.

- A new embeddings model for vector-based retrieval augmented generation, enabling customers to embed their documents into vector embeddings, which can be retrieved during the Q&A process without resulting in hallucinations. The LLM then takes the results to analyze, extract, or summarize the information.

- A world-leading automated speech recognition model to transcribe and analyze voice data.

- Additional multi-modal and long sequence length capabilities.

- Inference-optimized systems with 3-tier Dataflow memory for uncompromised high bandwidth and high capacity.
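The retrieval step behind vector-based retrieval augmented generation, mentioned in the embeddings item above, can be sketched roughly as follows. This is a minimal illustration and not SambaNova's implementation: the `embed` function is a toy bag-of-words stand-in for a real embeddings model, and every function name here is hypothetical.

```python
import math
from collections import Counter


def embed(text: str) -> Counter:
    """Toy embedding: word counts (a stand-in for a real embeddings model)."""
    return Counter(text.lower().split())


def cosine_similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm_a = math.sqrt(sum(value * value for value in a.values()))
    norm_b = math.sqrt(sum(value * value for value in b.values()))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)


def retrieve(query: str, documents: list[str], top_k: int = 1) -> list[str]:
    """Return the top_k documents most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(
        documents,
        key=lambda doc: cosine_similarity(query_vector, embed(doc)),
        reverse=True,
    )
    return ranked[:top_k]


def build_prompt(query: str, passages: list[str]) -> str:
    """Assemble the text an LLM would receive: retrieved context, then the question."""
    context = "\n".join(passages)
    return f"Answer using only this context:\n{context}\n\nQuestion: {query}"


documents = [
    "The SN40L is manufactured by TSMC.",
    "BLOOM 176B is a multilingual foundation model.",
]
question = "Who manufactured the SN40L?"
passages = retrieve(question, documents)
prompt = build_prompt(question, passages)
print(prompt)
```

In a real deployment the document embeddings would be computed once and stored in a vector index rather than recomputed per query. The prompt restricts the model to the retrieved passages, which is how this approach aims to keep answers grounded in the customer's own documents.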

“Recent breakthroughs have highlighted the potential for AI to rapidly improve both our lives and our businesses. While the AI hype cycle drove curiosity for AI’s potential, the Fortune 1000 is seeking more than a new capability – they are asking for predictability, dependability, and enterprise-grade availability,” said GV General Partner and SambaNova Series A Board Director Dave Munichiello. “SambaNova’s announcement today underscores the company’s commitment to providing reliable access to best-in-class open-source models on top of world-class systems, putting the customer in control of their models, their data, and their compute resources.”