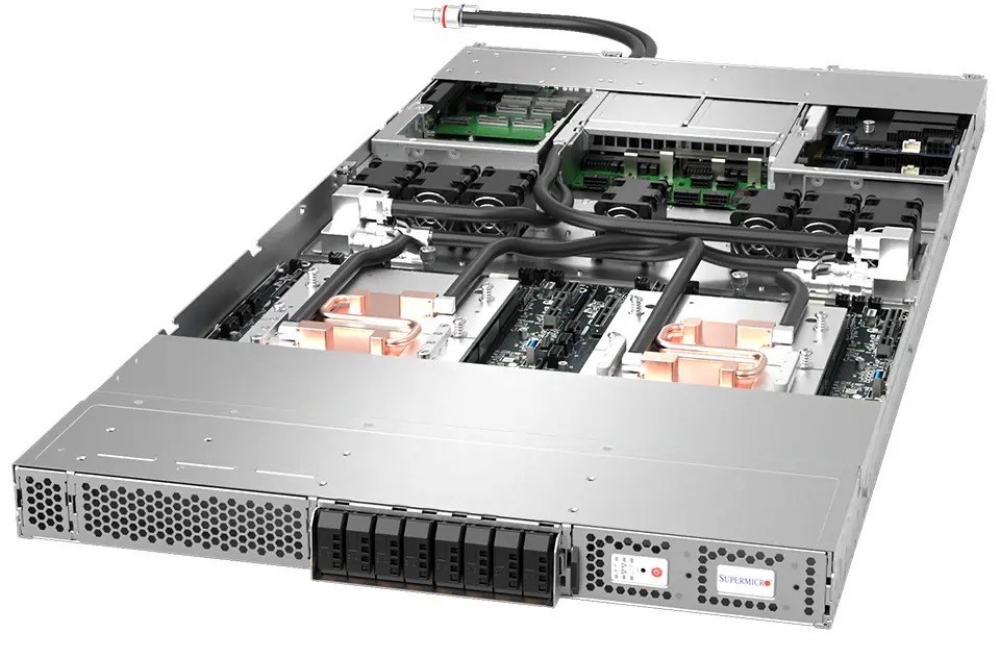

Supermicro starts superchip-based server shipments

Supermicro has introduced one of the industry’s broadest portfolios of new GPU systems based on the NVIDIA MGX reference architecture, featuring the latest NVIDIA GH200 Grace Hopper Superchip and NVIDIA Grace CPU Superchip.

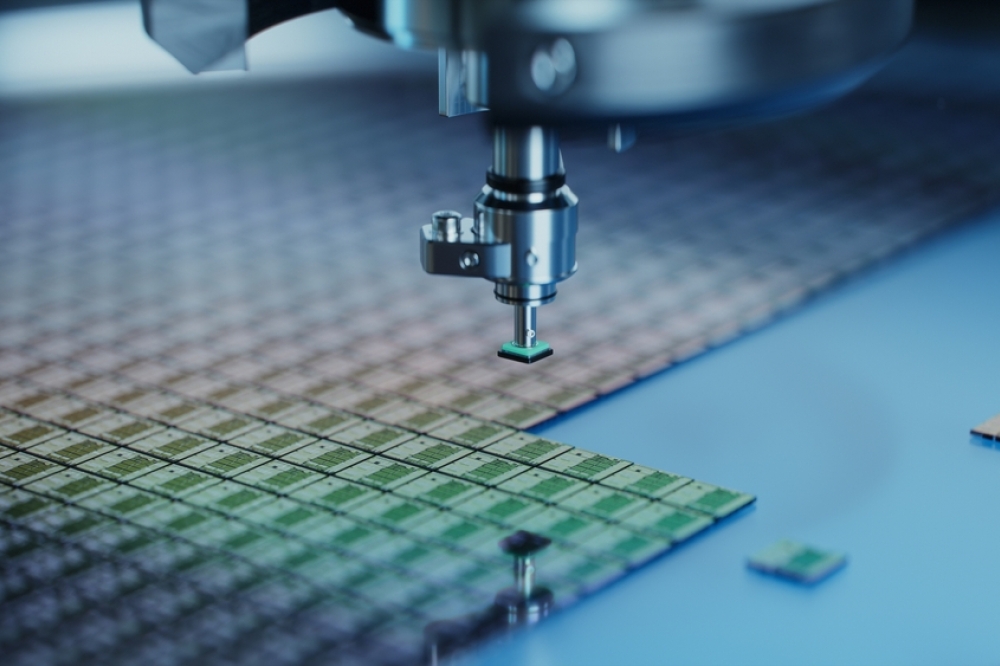

The new modular architecture is designed to standardise AI infrastructure and accelerated computing in compact 1U and 2U form factors while providing flexibility and expandability for current and future GPUs, DPUs, and CPUs. Supermicro’s advanced liquid-cooling technology enables very high-density configurations, such as a 1U 2-node configuration with 2 NVIDIA GH200 Grace Hopper Superchips integrated with a high-speed interconnect. Supermicro can deliver thousands of rack-scale AI servers per month from facilities worldwide and ensures plug-and-play compatibility.

“Supermicro is a recognised leader in driving today’s AI revolution, transforming data centers to deliver the promise of AI to many workloads,” said Charles Liang, President and CEO of Supermicro. “It is crucial for us to bring systems that are highly modular, scalable, and universal for rapidly evolving AI technologies. Supermicro’s NVIDIA MGX-based solutions show that our building-block strategy enables us to bring the latest systems to market quickly and are the most workload-optimised in the industry. By collaborating with NVIDIA, we are helping accelerate time to market for enterprises to develop new AI-enabled applications, simplifying deployment and reducing environmental impact. The range of new servers incorporates the latest industry technology optimised for AI, including NVIDIA GH200 Grace Hopper Superchips, BlueField, and PCIe 5.0 EDSFF slots.”

“NVIDIA and Supermicro have long collaborated on some of the most performant AI systems available,” said Ian Buck, vice president of hyperscale and HPC at NVIDIA. “The NVIDIA MGX modular reference design, combined with Supermicro’s server expertise, will create new generations of AI systems that include our Grace and Grace Hopper Superchips to benefit customers and industries worldwide.”

Supermicro’s NVIDIA MGX Platform Overview

Supermicro’s NVIDIA MGX platforms are designed to deliver a range of servers that will accommodate future AI technologies. This new product line addresses AI-based servers’ unique thermal, power, and mechanical challenges.

Supermicro’s new NVIDIA MGX line of servers includes:

ARS-111GL-NHR – 1 NVIDIA GH200 Grace Hopper Superchip, Air-Cooled

ARS-111GL-NHR-LCC – 1 NVIDIA GH200 Grace Hopper Superchip, Liquid-Cooled

ARS-111GL-DHNR-LCC – 2 NVIDIA GH200 Grace Hopper Superchips, 2 Nodes, Liquid-Cooled

ARS-121L-DNR – 2 NVIDIA Grace CPU Superchips, 1 in each of 2 Nodes, 288 Cores in total

ARS-221GL-NR – 1 NVIDIA Grace CPU Superchip in 2U

SYS-221GE-NR – Dual-socket 4th Gen Intel Xeon Scalable processors with up to 4 NVIDIA H100 Tensor Core GPUs or 4 other NVIDIA PCIe GPUs

Every MGX platform can be enhanced with NVIDIA BlueField®-3 DPUs and/or NVIDIA ConnectX®-7 adapters for high-performance InfiniBand or Ethernet networking.

Technical Specifications

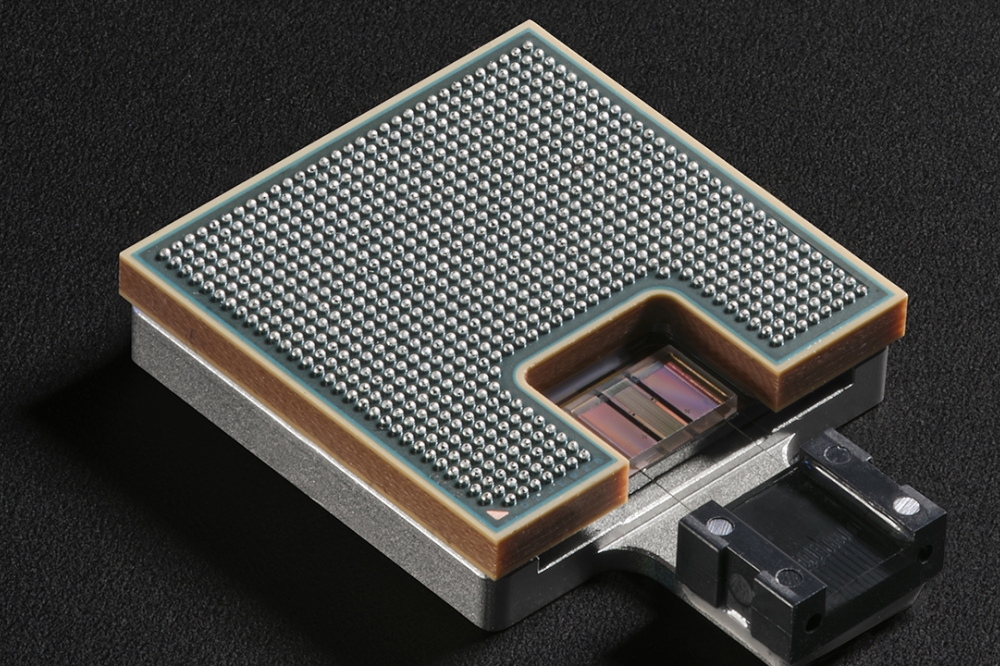

Supermicro’s 1U NVIDIA MGX systems have up to 2 NVIDIA GH200 Grace Hopper Superchips featuring 2 NVIDIA H100 GPUs and 2 NVIDIA Grace CPUs. Each superchip comes with 480GB of LPDDR5X memory for the CPU and either 96GB of HBM3 or 144GB of HBM3e memory for the GPU. The memory-coherent, high-bandwidth, low-latency NVIDIA NVLink-C2C interconnect links the CPU, GPU, and memory at 900GB/s, 7 times faster than PCIe 5.0. The modular architecture provides multiple PCIe 5.0 x16 full-height, full-length (FHFL) slots to accommodate DPUs for cloud and data management, as well as expandability for additional GPUs, networking, and storage.
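The memory and bandwidth figures above can be checked with a little arithmetic. The sketch below is illustrative only: it assumes the "7 times" comparison is against a PCIe 5.0 x16 link counted as raw bidirectional bandwidth (32 GT/s per lane, 64 GB/s each way, encoding overhead ignored), which is one plausible reading rather than a stated basis. The constant and function names are hypothetical.

```python
# Back-of-envelope figures for a 1U system with two GH200 superchips,
# using the per-superchip numbers quoted in the text.
# ASSUMPTION: the PCIe 5.0 baseline is a x16 link at 32 GT/s per lane,
# counted as raw bidirectional bandwidth with encoding overhead ignored.

SUPERCHIPS_PER_1U = 2
LPDDR5X_GB = 480                               # CPU memory per GH200
HBM_OPTIONS_GB = {"HBM3": 96, "HBM3e": 144}    # GPU memory per GH200
NVLINK_C2C_GBPS = 900                          # GB/s between CPU and GPU


def pcie5_bidirectional_gbps(lanes: int = 16, gt_per_s: float = 32.0) -> float:
    """Raw PCIe 5.0 bandwidth in GB/s across both directions."""
    per_direction = lanes * gt_per_s / 8       # ~1 Gb/s per GT/s per lane; /8 for bytes
    return 2 * per_direction


def memory_per_system(hbm_kind: str) -> int:
    """Total CPU + GPU memory (GB) across all superchips in one 1U chassis."""
    per_chip = LPDDR5X_GB + HBM_OPTIONS_GB[hbm_kind]
    return SUPERCHIPS_PER_1U * per_chip


if __name__ == "__main__":
    pcie = pcie5_bidirectional_gbps()
    print(f"PCIe 5.0 x16: {pcie:.0f} GB/s")
    print(f"NVLink-C2C vs PCIe 5.0 x16: {NVLINK_C2C_GBPS / pcie:.1f}x")
    for kind in HBM_OPTIONS_GB:
        print(f"{kind}: {memory_per_system(kind)} GB per 1U system")
```

Under these assumptions, 900 / 128 comes to roughly 7.0, and a two-superchip chassis carries 1,152GB (HBM3) or 1,248GB (HBM3e) of combined CPU and GPU memory.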

With the 1U 2-node design featuring 2 NVIDIA GH200 Grace Hopper Superchips, Supermicro’s proven direct-to-chip liquid cooling solutions can reduce OPEX by more than 40% while increasing computing density and simplifying rack-scale deployment for large language model (LLM) clusters and HPC applications.

The 2U Supermicro NVIDIA MGX platform supports both NVIDIA Grace and x86 CPUs with up to 4 full-size data centre GPUs, such as the NVIDIA H100 PCIe, H100 NVL, or L40S. It also provides three additional PCIe 5.0 x16 slots for I/O connectivity and eight hot-swap EDSFF storage bays.
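When planning a 2U build, it can help to treat the chassis as a fixed slot budget. The following is a hypothetical planning helper, not a Supermicro tool: the class and field names are invented for illustration, and it assumes each NIC or DPU occupies one of the three extra PCIe 5.0 x16 slots rather than a GPU slot.

```python
from dataclasses import dataclass


# Slot budget of the 2U MGX chassis as described in the text.
# HYPOTHETICAL: class and field names are illustrative only.
@dataclass(frozen=True)
class ChassisBudget:
    gpu_slots: int = 4      # full-size data centre GPUs (e.g. H100 PCIe, H100 NVL, L40S)
    io_slots: int = 3       # additional PCIe 5.0 x16 slots for I/O
    edsff_bays: int = 8     # hot-swap EDSFF storage bays


@dataclass
class BuildPlan:
    gpus: int
    nics_or_dpus: int       # ASSUMPTION: each card uses one I/O slot
    drives: int


def validate(plan: BuildPlan, budget: ChassisBudget = ChassisBudget()) -> list:
    """Return a list of problems; an empty list means the plan fits the chassis."""
    problems = []
    if plan.gpus > budget.gpu_slots:
        problems.append(f"{plan.gpus} GPUs exceed {budget.gpu_slots} GPU slots")
    if plan.nics_or_dpus > budget.io_slots:
        problems.append(
            f"{plan.nics_or_dpus} I/O cards exceed {budget.io_slots} x16 slots")
    if plan.drives > budget.edsff_bays:
        problems.append(f"{plan.drives} drives exceed {budget.edsff_bays} EDSFF bays")
    return problems


if __name__ == "__main__":
    print(validate(BuildPlan(gpus=4, nics_or_dpus=2, drives=8)))   # fits
    print(validate(BuildPlan(gpus=5, nics_or_dpus=1, drives=4)))   # too many GPUs
```

Returning a list of problems rather than raising on the first one lets a planner see every constraint a proposed build violates in a single pass.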

Supermicro offers NVIDIA networking to secure and accelerate AI workloads on the MGX platform. This includes a combination of NVIDIA BlueField-3 DPUs, which provide 2x 200Gb/s connectivity for accelerating user-to-cloud and data storage access, and ConnectX-7 adapters, which provide up to 400Gb/s InfiniBand or Ethernet connectivity between GPU servers.
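The quoted link rates are in gigabits per second, so converting them to bytes makes them easier to compare with memory and interconnect figures. The sketch below simply adds up peak line rates for a hypothetical mix of cards; the card counts are assumptions, and real throughput will be lower once protocol overhead is included.

```python
# Aggregate peak line rate per server from the figures quoted in the text.
# HYPOTHETICAL: the card counts passed in are illustrative; the chassis's
# available slots set the real limit.

BLUEFIELD3_PORTS = 2
BLUEFIELD3_PORT_GBITS = 200     # Gb/s per BlueField-3 port
CONNECTX7_GBITS = 400           # Gb/s, "up to", per ConnectX-7 adapter


def aggregate_gbits(bluefield3_cards: int, connectx7_cards: int) -> int:
    """Peak line rate in Gb/s across all DPUs and adapters in one server."""
    return (bluefield3_cards * BLUEFIELD3_PORTS * BLUEFIELD3_PORT_GBITS
            + connectx7_cards * CONNECTX7_GBITS)


def gbits_to_gbytes(gbits: float) -> float:
    """Convert Gb/s to GB/s (8 bits per byte), ignoring protocol overhead."""
    return gbits / 8


if __name__ == "__main__":
    total = aggregate_gbits(bluefield3_cards=1, connectx7_cards=2)
    print(f"{total} Gb/s = {gbits_to_gbytes(total):.0f} GB/s")
```

With one BlueField-3 DPU and two ConnectX-7 adapters, this gives 1,200Gb/s, or about 150GB/s of peak line rate.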

Developers can quickly put these new systems to work with NVIDIA software offerings for workloads across industries. These offerings include NVIDIA AI Enterprise, enterprise-grade software that powers the NVIDIA AI platform and streamlines the development and deployment of production-ready generative AI, computer vision, speech AI, and more. Additionally, the NVIDIA HPC Software Development Kit (SDK) provides the essential tools for advancing scientific computing.

Every aspect of Supermicro NVIDIA MGX systems is designed to increase efficiency, from intelligent thermal design to component selection. NVIDIA Grace CPU Superchips feature 144 cores and deliver up to 2x the performance per watt of today’s industry-standard x86 CPUs. Specific Supermicro NVIDIA MGX systems can be configured with two nodes in 1U, totalling 288 cores on two Grace CPU Superchips, to provide ground-breaking compute density and energy efficiency in hyperscale and edge data centres.
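Core density per rack unit follows directly from the 144-cores-per-superchip figure. The sketch below compares the 1U two-node configuration (two superchips, 288 cores) with the 2U single-superchip system; it assumes one Grace CPU Superchip per node in the 1U system, consistent with the 288-core total, and it counts CPU cores only, ignoring the GPUs the 2U chassis can host.

```python
# Core density derived from the Grace CPU Superchip figures in the text.
# ASSUMPTION: the 1U two-node system holds one superchip per node, which
# matches the stated 288-core total. GPU capacity is not counted.

CORES_PER_GRACE_SUPERCHIP = 144

SYSTEMS = {
    # model: (rack units, Grace CPU Superchips per chassis)
    "ARS-121L-DNR": (1, 2),
    "ARS-221GL-NR": (2, 1),
}


def total_cores(model: str) -> int:
    """CPU cores in one chassis of the given model."""
    _, superchips = SYSTEMS[model]
    return superchips * CORES_PER_GRACE_SUPERCHIP


def cores_per_u(model: str) -> float:
    """CPU cores per rack unit for the given model."""
    units, _ = SYSTEMS[model]
    return total_cores(model) / units


if __name__ == "__main__":
    for model in SYSTEMS:
        print(f"{model}: {total_cores(model)} cores, {cores_per_u(model):.0f} per U")
```

On CPU cores alone, the 1U two-node system packs 288 cores per U against 72 per U for the 2U system. That gap is the density advantage the paragraph describes, though the 2U system trades some of that density for GPU and storage capacity.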