Intel demonstrates AI architectural expertise

At Hot Chips 2024, Intel Xeon 6 SoC and Lunar Lake processors, alongside Intel Gaudi 3 AI accelerators and optical compute interconnect, headline technical presentations.

Demonstrating the depth and breadth of its technologies at Hot Chips 2024, Intel showcased advancements across AI use cases – from the data center, cloud and network to the edge and PC – while covering the industry’s 'most advanced and first-ever' fully integrated optical compute interconnect (OCI) chiplet for high-speed AI data processing. The company also unveiled new details about the Intel® Xeon® 6 SoC (code-named Granite Rapids-D), scheduled to launch during the first half of 2025.

Pere Monclus, chief technology officer, Network and Edge Group at Intel, commented: “Across consumer and enterprise AI usages, Intel continuously delivers the platforms, systems and technologies necessary to redefine what’s possible. As AI workloads intensify, Intel’s broad industry experience enables us to understand what our customers need to drive innovation, creativity and ideal business outcomes. While more performant silicon and increased platform bandwidth are essential, Intel also knows that every workload has unique challenges: A system designed for the data center can no longer simply be repurposed for the edge. With proven expertise in systems architecture across the compute continuum, Intel is well-positioned to power the next generation of AI innovation.”

At Hot Chips 2024, Intel presented four technical papers highlighting the Intel Xeon 6 SoC, Lunar Lake client processor, Intel® Gaudi® 3 AI accelerator and the OCI chiplet.

Built for the edge: The next-generation Intel Xeon 6 SoC

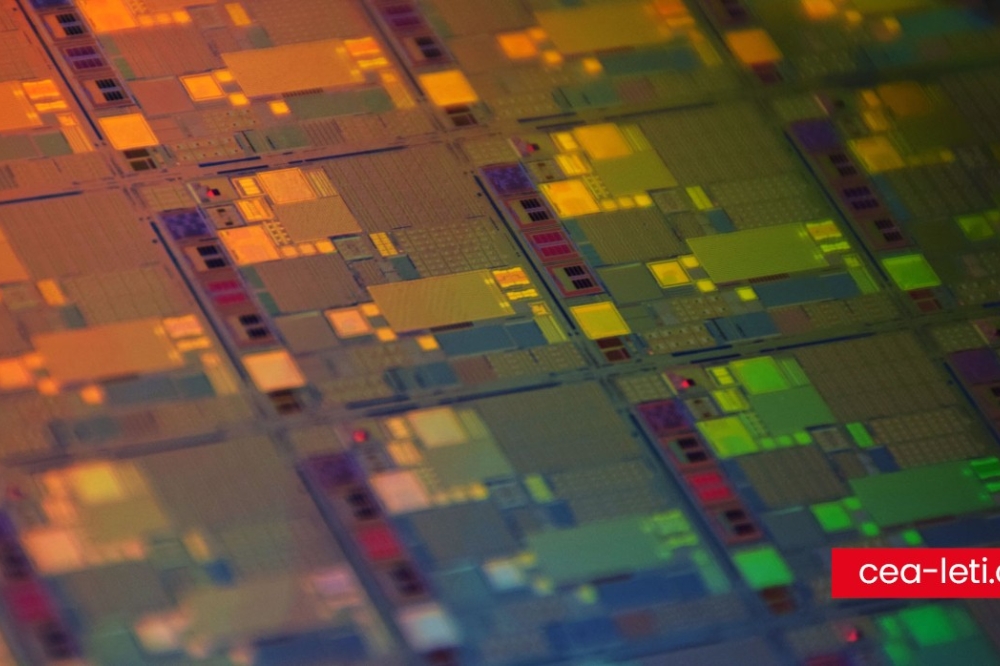

Praveen Mosur, Intel Fellow and network and edge silicon architect, unveiled new details about the Intel Xeon 6 system-on-chip (SoC) design and how it can address edge-specific use case challenges such as unreliable network connections and limited space and power. Based on knowledge gained from more than 90,000 edge deployments worldwide, the SoC will be the company’s most edge-optimized processor to date. With the ability to scale from edge devices to edge nodes using a single-system architecture and integrated AI acceleration, enterprises can more easily, efficiently and confidentially manage the full AI workflow from data ingest to inferencing – helping to improve decision making, increase automation and deliver value to their customers.

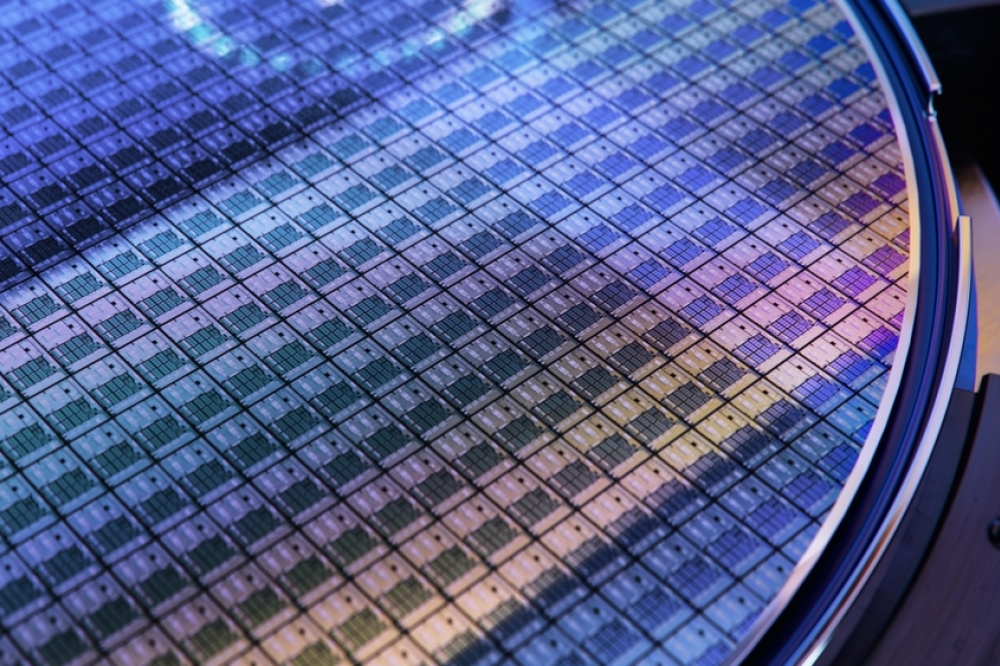

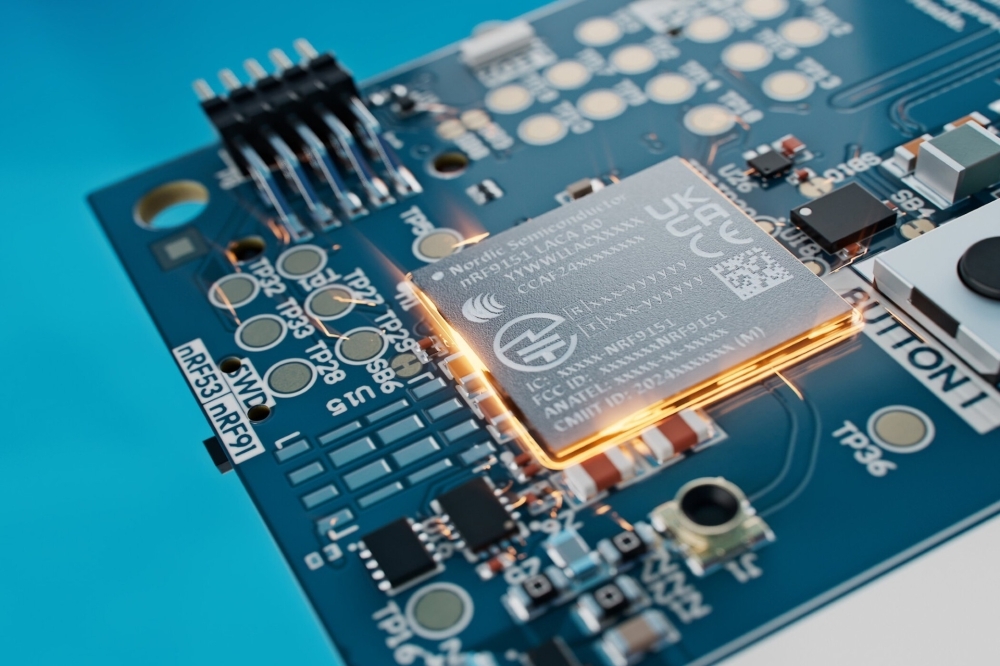

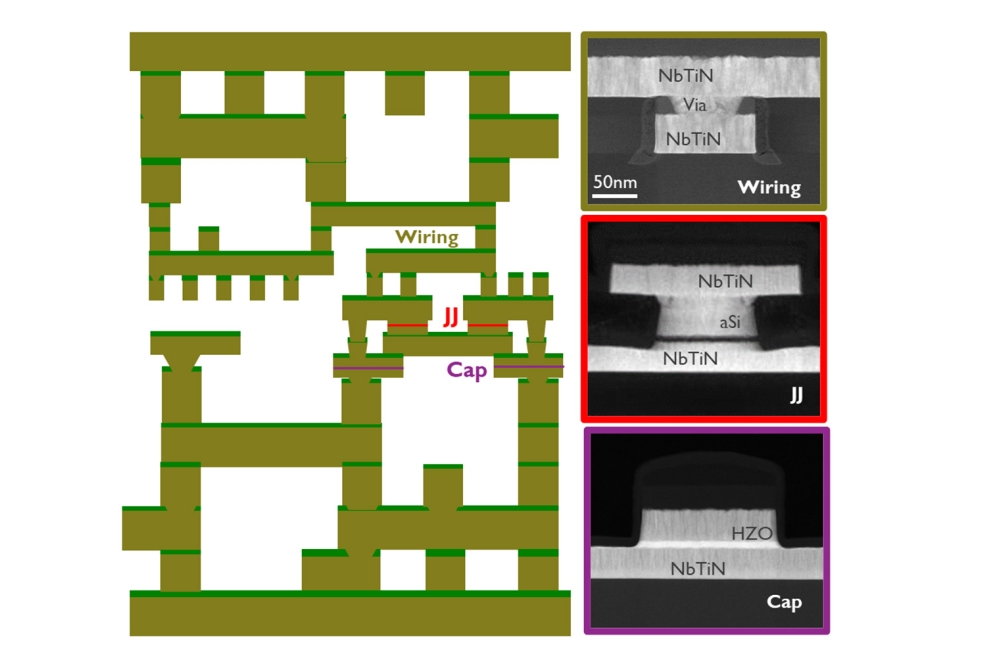

The Intel Xeon 6 SoC combines the compute chiplet from Intel Xeon 6 processors with an edge-optimized I/O chiplet built on Intel 4 process technology. This enables the SoC to deliver significant improvements in performance, power efficiency and transistor density compared to previous technologies. Additional features include:

Up to 32 lanes PCI Express (PCIe) 5.0.

Up to 16 lanes Compute Express Link (CXL) 2.0.

2x100G Ethernet.

Four and eight memory channels in compatible BGA packages.

Edge-specific enhancements, including extended operating temperature ranges and industrial-class reliability, making it ideal for high-performance rugged equipment.
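To give a rough sense of scale for the I/O figures listed above, the sketch below estimates peak one-direction bandwidth for 32 PCIe 5.0 lanes and for the two 100G Ethernet ports. The per-lane signaling rate (32 GT/s) and 128b/130b encoding come from the PCIe 5.0 specification, not from Intel's presentation, and the function and constant names are illustrative. The results are theoretical ceilings that ignore protocol overhead, so they should not be read as measured Xeon 6 SoC throughput.

```python
# Assumptions (not stated in the article): PCIe 5.0 signals at 32 GT/s per
# lane and uses 128b/130b line encoding. Names below are illustrative only.
PCIE5_GT_PER_SEC_PER_LANE = 32.0
PCIE5_ENCODING_EFFICIENCY = 128 / 130


def pcie5_peak_gbytes_per_sec(lanes: int) -> float:
    """Theoretical one-direction PCIe 5.0 bandwidth in GB/s, before protocol overhead."""
    gbits = lanes * PCIE5_GT_PER_SEC_PER_LANE * PCIE5_ENCODING_EFFICIENCY
    return gbits / 8  # bits -> bytes


def ethernet_peak_gbytes_per_sec(ports: int, gbps_per_port: float) -> float:
    """Raw one-direction Ethernet line rate in GB/s."""
    return ports * gbps_per_port / 8


pcie_x32 = pcie5_peak_gbytes_per_sec(32)           # "up to 32 lanes PCIe 5.0"
ethernet = ethernet_peak_gbytes_per_sec(2, 100.0)  # "2x100G Ethernet"

print(f"PCIe 5.0 x32: ~{pcie_x32:.1f} GB/s per direction")
print(f"2x100G Ethernet: {ethernet:.1f} GB/s per direction")
```

Under these assumptions the PCIe figure comes out near 126 GB/s per direction and the Ethernet figure at 25 GB/s per direction.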

Intel Xeon 6 SoC also includes features designed to increase the performance and efficiency of edge and network workloads, including new media acceleration to enhance video transcode and analytics for live OTT, VOD and broadcast media; Intel® Advanced Vector Extensions and Intel® Advanced Matrix Extensions for improved inferencing performance; Intel® QuickAssist Technology for more efficient network and storage performance; Intel® vRAN Boost for reduced power consumption for virtualized RAN; and support for Intel® Tiber™ Edge Platform, which allows users to build, deploy, run, manage, and scale edge and AI solutions on standard hardware with cloud-like simplicity.

Lunar Lake: Powering the next generation of AI PCs

Arik Gihon, lead client CPU SoC architect, discussed the Lunar Lake client processor and how it’s designed to set a new bar for x86 power efficiency while delivering leading core, graphics and client AI performance. New Performance-cores (P-cores) and Efficient-cores (E-cores) deliver amazing performance at up to 40% lower system-on-chip power compared with the previous generation. The new neural processing unit is up to 4x faster, enabling corresponding improvements in generative AI (GenAI) versus the previous generation. Additionally, the new Xe2 graphics processing unit cores improve gaming and graphics performance by 1.5x over the previous generation.

Additional details about Lunar Lake will be shared during the Intel Core Ultra launch event on September 3.

Intel Gaudi 3 AI accelerator: Architected for GenAI training and inference

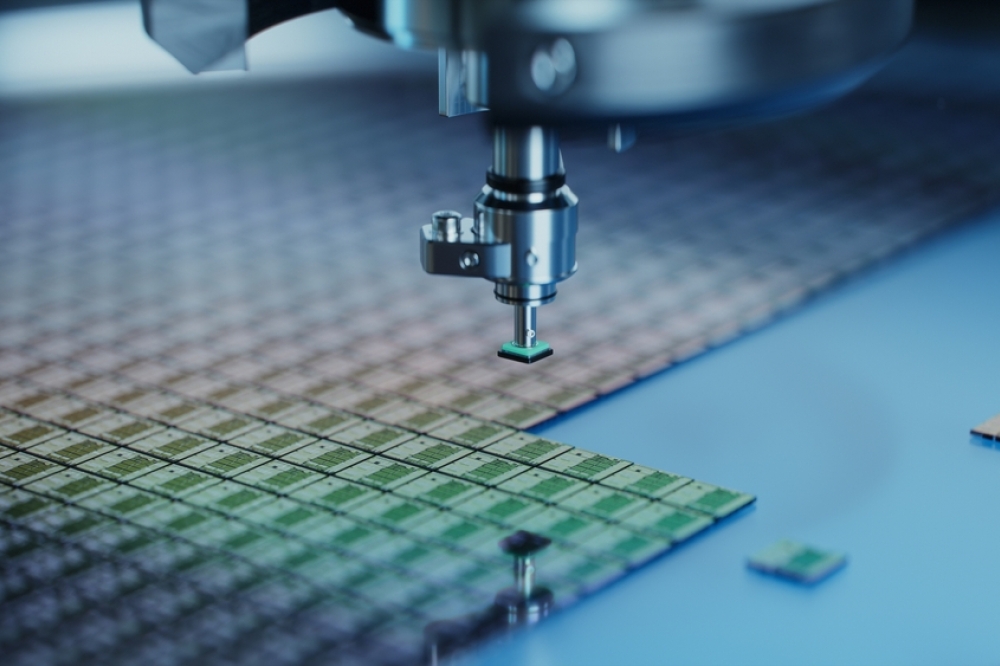

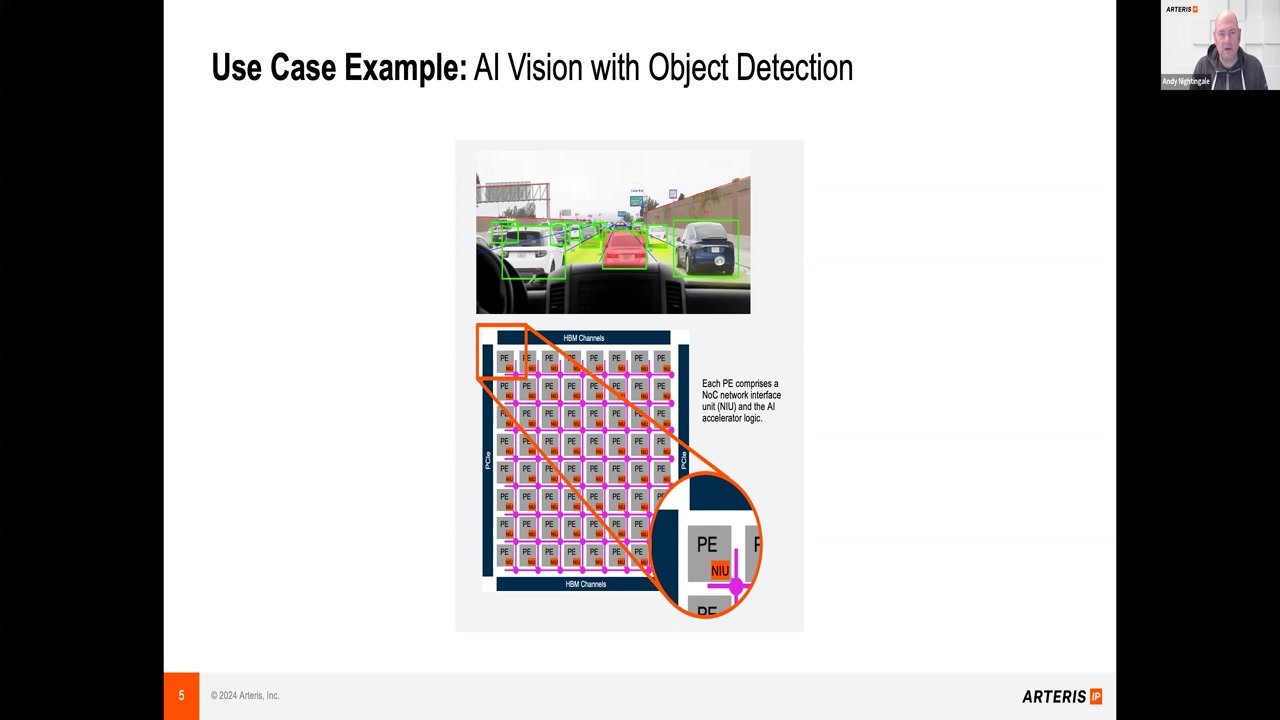

Roman Kaplan, chief architect of AI accelerators, explained that training and deploying generative AI models requires extensive compute power, which creates significant cost and power challenges as systems scale from single nodes to clusters of thousands of nodes.

The Intel Gaudi 3 AI accelerator addresses these issues with an architecture optimized across compute, memory and networking, employing strategies such as efficient matrix multiplication engines, two-level cache integration and extensive RoCE (RDMA over Converged Ethernet) networking. This allows the Gaudi 3 AI accelerator to achieve significant performance and power efficiencies that enable AI data centers to operate more cost-effectively and sustainably, addressing scalability issues when deploying GenAI workloads.

Information about Gaudi 3 AI accelerators and future Intel Xeon 6 products will be shared during a launch event in September.

4 terabits per second optical compute interconnect chiplet for XPU-to-XPU connectivity

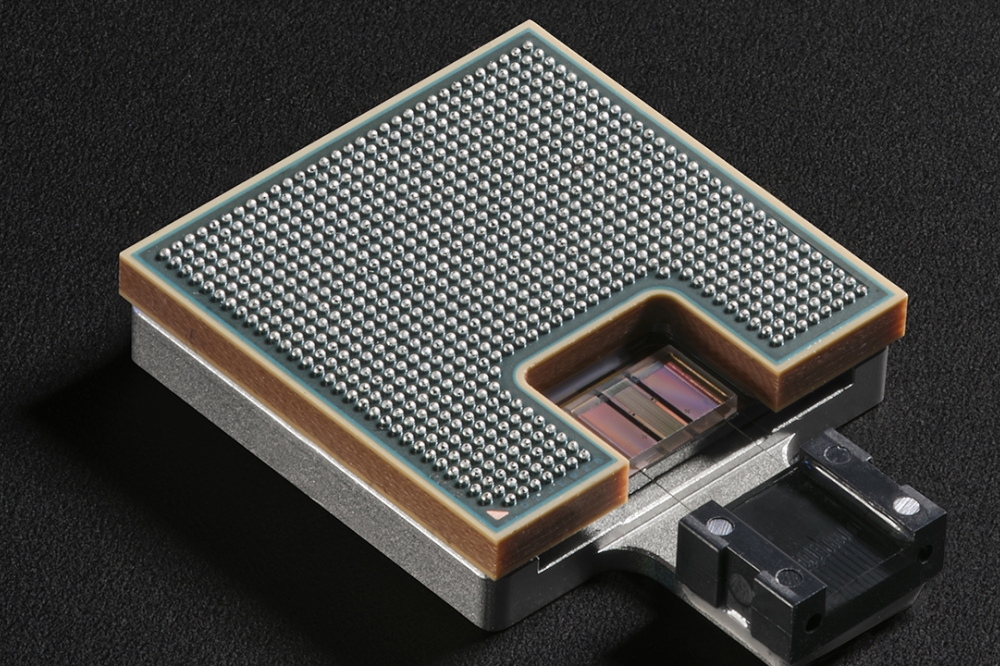

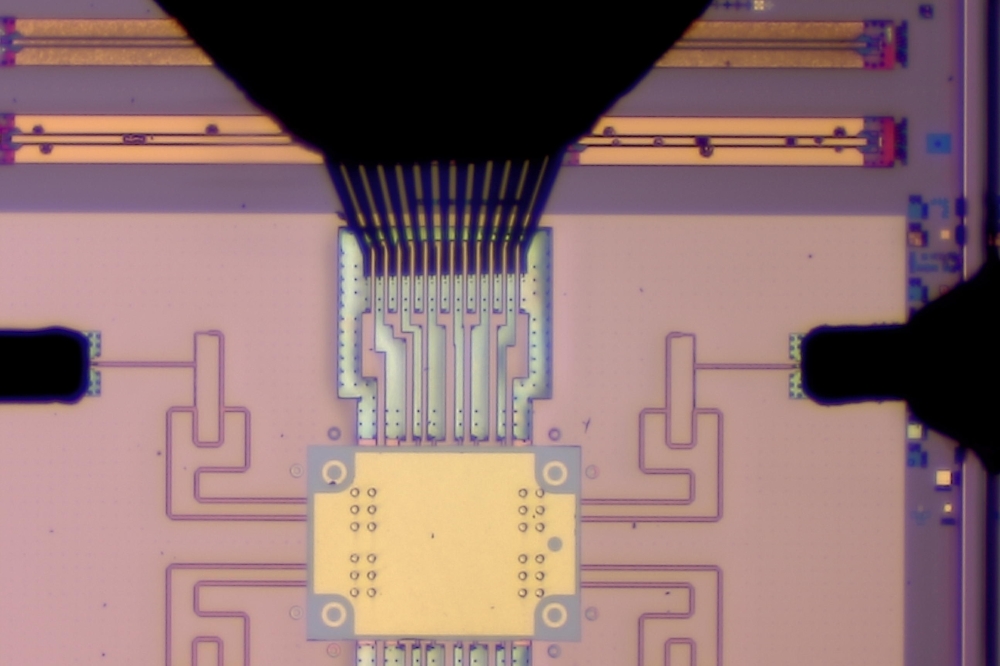

Intel’s Integrated Photonics Solutions (IPS) Group demonstrated the industry’s most advanced and first-ever fully integrated optical compute interconnect chiplet co-packaged with an Intel CPU and running live data.

Saeed Fathololoumi, photonic architect in the Integrated Photonics Solutions Group, covered the OCI chiplet, which is designed to support 64 channels of 32 gigabits per second (Gbps) data transmission in each direction over up to 100 meters of optical fiber. Fathololoumi also discussed how it’s expected to address AI infrastructure’s growing demands for higher bandwidth, lower power consumption and longer reach. Intel’s OCI chiplet represents a leap forward in high-bandwidth interconnect for future scalability of CPU/GPU cluster connectivity and novel computing architectures, including coherent memory expansion and resource disaggregation in emerging AI infrastructure for data centers and high performance computing (HPC) applications.
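The channel figures above can be related to the 4 terabits per second in the section heading with a short calculation. The sketch below multiplies the stated channel count by the stated per-channel rate, then doubles the result on the assumption that the headline number counts both directions together; the text itself does not spell that out. The names are illustrative, and the result is a raw signaling total with no allowance for encoding or protocol overhead.

```python
# Figures from the text: 64 channels at 32 Gbps each, in each direction.
CHANNELS = 64
GBPS_PER_CHANNEL = 32


def aggregate_gbps(channels: int, gbps_per_channel: int) -> int:
    """Raw one-direction bandwidth in Gbps (no encoding or protocol overhead)."""
    return channels * gbps_per_channel


per_direction = aggregate_gbps(CHANNELS, GBPS_PER_CHANNEL)

# Assumption: the "4 terabits per second" headline sums both directions.
bidirectional = 2 * per_direction

print(f"per direction:   {per_direction} Gbps (~{per_direction / 1000:.2f} Tbps)")
print(f"both directions: {bidirectional} Gbps (~{bidirectional / 1000:.2f} Tbps)")
```

This gives 2,048 Gbps (about 2 Tbps) per direction and 4,096 Gbps (about 4 Tbps) when both directions are counted.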

The technical deep-dive sessions at Hot Chips 2024 provided unique technical perspectives from across Intel’s product teams that bring next-generation AI technologies to market.